Real-time data processing has become a cornerstone of modern business operations. Apache Kafka is a leading distributed streaming platform that lets organizations ingest, process, and analyze large volumes of data in real time.

As Kafka becomes the backbone of more and more data-driven systems, the demand for tools that make integrating with it easier keeps growing. Nussknacker is a low-code tool designed to streamline that integration and make Kafka's capabilities accessible without extensive coding.

This article explores how Nussknacker integrates with Apache Kafka, including its support for Schema Registry. Whether you're a seasoned Kafka user or just starting with real-time data processing, you'll see how Nussknacker simplifies the integration so that you can work with Kafka with minimal effort.

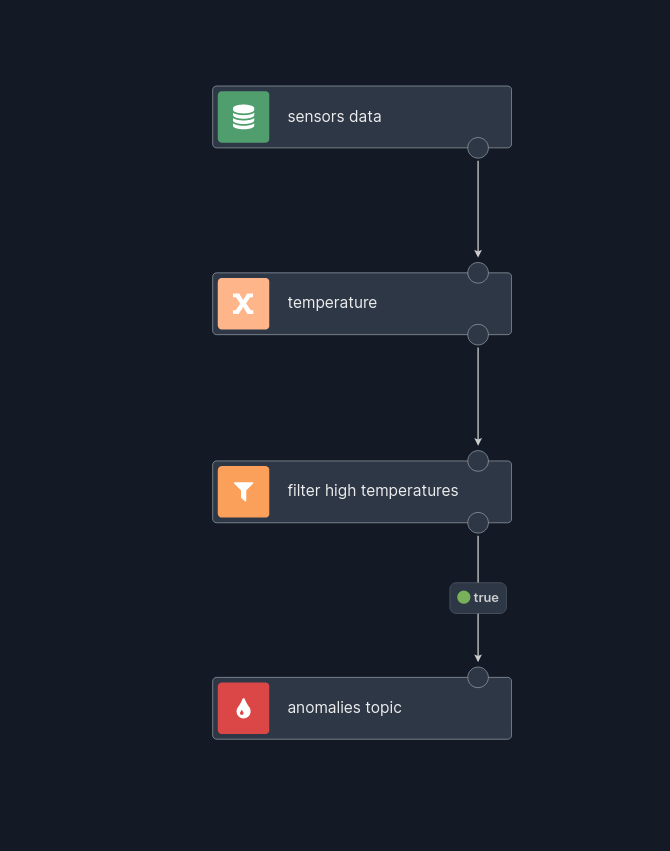

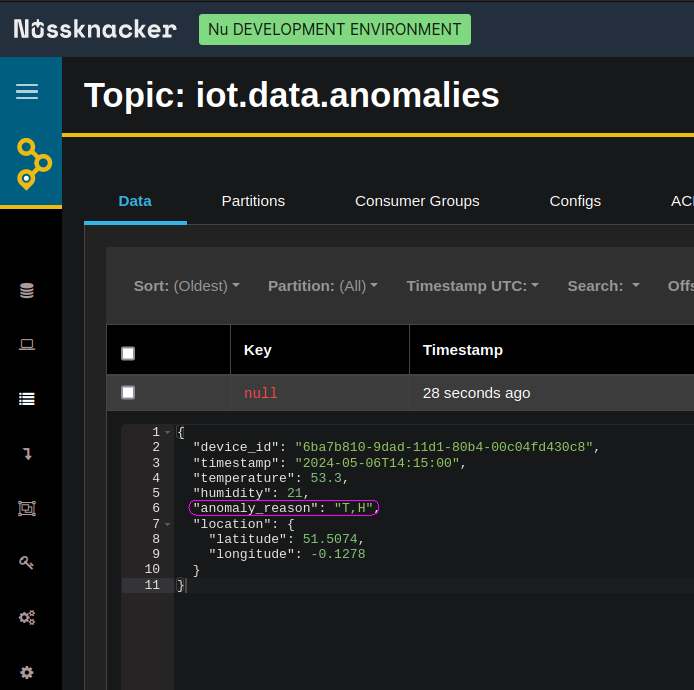

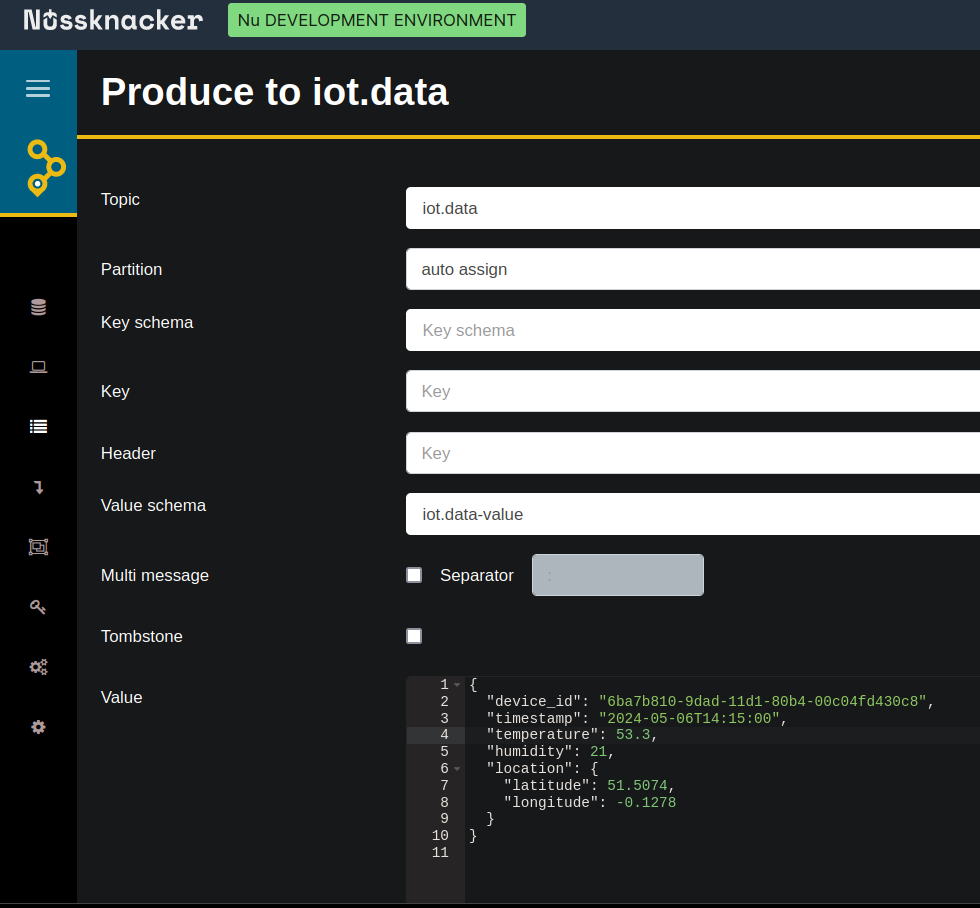

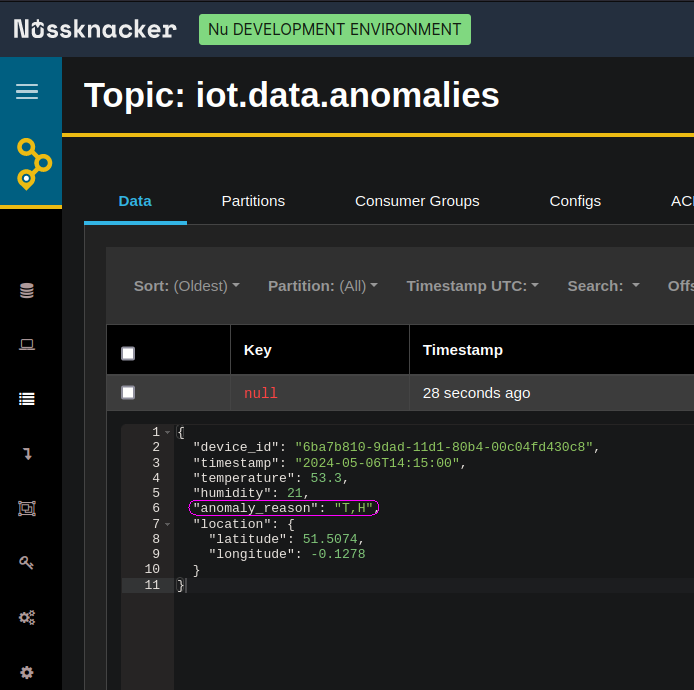

Stay tuned: later on, we illustrate these concepts with an Internet of Things example that shows a real-world application of Kafka integration with Nussknacker.

Background recap

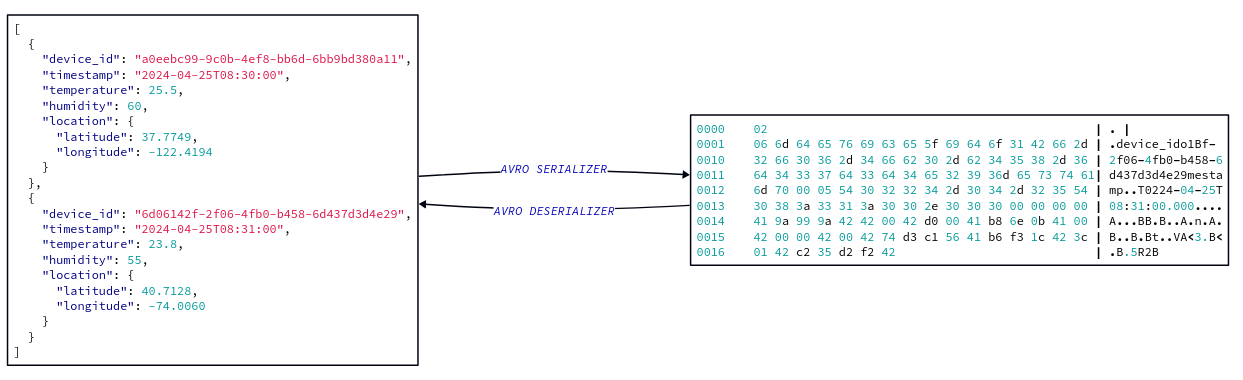

An Avro schema defines the structure of data in Avro format, specifying the fields of a record and their data types. The schema itself is written in JSON, while the data is serialized in a compact binary form, which reduces the size of messages sent over the network. Smaller messages mean lower bandwidth usage and better overall performance, especially in high-throughput Kafka deployments where efficient data transfer is critical. This can be summarized in the following diagram:

Fun fact: The original Apache Avro logo was from the defunct British aircraft manufacturer Avro.

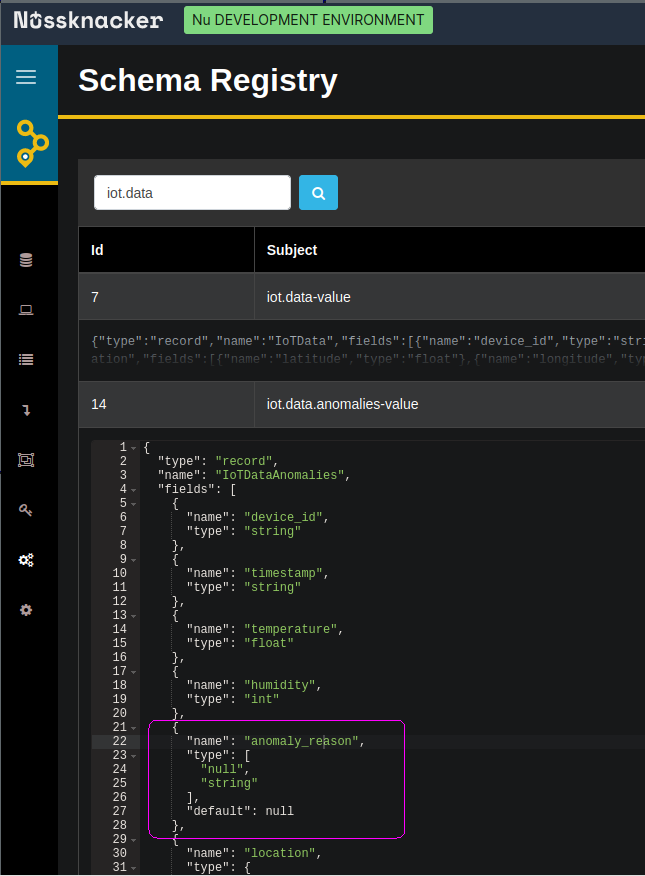
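To make the size argument concrete, here is a minimal sketch in Python that hand-encodes one record following the Avro binary encoding rules (zigzag variable-length longs, length-prefixed UTF-8 strings, 8-byte little-endian doubles) and compares the result with the same record as JSON text. The `SensorReading` schema, its field names, and the sample values are assumptions made up for illustration; in practice you would use an Avro library rather than an encoder like this one.

```python
import json
import struct

# Hypothetical IoT schema, written the way an Avro schema is: as JSON.
SENSOR_SCHEMA = {
    "type": "record",
    "name": "SensorReading",
    "namespace": "example.iot",
    "fields": [
        {"name": "device_id", "type": "string"},
        {"name": "timestamp", "type": "long"},
        {"name": "temperature", "type": "double"},
    ],
}


def encode_long(value: int) -> bytes:
    """Avro int/long: zigzag encoding, then 7 bits per byte (low bits first)."""
    zigzag = (value << 1) ^ (value >> 63)
    out = bytearray()
    while zigzag > 0x7F:
        out.append((zigzag & 0x7F) | 0x80)  # high bit set: more bytes follow
        zigzag >>= 7
    out.append(zigzag)
    return bytes(out)


def encode_string(value: str) -> bytes:
    """Avro string: byte length as a long, followed by the UTF-8 bytes."""
    raw = value.encode("utf-8")
    return encode_long(len(raw)) + raw


def encode_double(value: float) -> bytes:
    """Avro double: 8 bytes, IEEE 754, little-endian."""
    return struct.pack("<d", value)


ENCODERS = {"string": encode_string, "long": encode_long, "double": encode_double}


def encode_record(record: dict, schema: dict) -> bytes:
    """A record is its field values concatenated in schema order, without names."""
    return b"".join(
        ENCODERS[field["type"]](record[field["name"]]) for field in schema["fields"]
    )


reading = {"device_id": "sensor-42", "timestamp": 1700000000000, "temperature": 21.5}
avro_bytes = encode_record(reading, SENSOR_SCHEMA)
json_bytes = json.dumps(reading).encode("utf-8")

print(f"Avro: {len(avro_bytes)} bytes, JSON: {len(json_bytes)} bytes")
```

The binary form is smaller mainly because field names are not repeated in every message: they live in the schema, which both sides must know in order to read the bytes.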

A schema registry is a centralized repository for managing Avro schemas in distributed systems such as Apache Kafka. It stores, retrieves, and manages versioned Avro schemas behind a unified interface for schema registration. The schema registry enables schema evolution by checking that each new version of a schema is compatible (backward, forward, or both, depending on configuration) with earlier versions. By enforcing these compatibility checks, it improves data interoperability and reliability in streaming data pipelines. Let us emphasize that a schema registry is a general concept. In what follows, we focus on one specific implementation: Confluent's Schema Registry. Keep in mind, however, that the “schema.registry.url” setting used by Kafka clients accepts any implementation that exposes the same schema registry API.
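As a rough sketch of what "registering" and "retrieving" a schema look like against Confluent's Schema Registry REST API, the Python snippet below builds the two HTTP requests without sending them. The registry address, the topic name `sensor-readings`, and the schema are assumptions for illustration; the subject name follows the default convention of topic name plus `-value`.

```python
import json
import urllib.request

REGISTRY_URL = "http://localhost:8081"  # assumption: a locally running registry
CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"

# Hypothetical schema for the value of a hypothetical "sensor-readings" topic.
SENSOR_SCHEMA = {
    "type": "record",
    "name": "SensorReading",
    "fields": [
        {"name": "device_id", "type": "string"},
        {"name": "temperature", "type": "double"},
    ],
}


def register_schema_request(subject: str, schema: dict) -> urllib.request.Request:
    """POST /subjects/{subject}/versions registers a new schema version.

    The schema is sent as a JSON *string* inside the "schema" field.
    """
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        url=f"{REGISTRY_URL}/subjects/{subject}/versions",
        data=body,
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


def fetch_schema_request(schema_id: int) -> urllib.request.Request:
    """GET /schemas/ids/{id} returns the schema registered under that ID."""
    return urllib.request.Request(
        url=f"{REGISTRY_URL}/schemas/ids/{schema_id}", method="GET"
    )


register_req = register_schema_request("sensor-readings-value", SENSOR_SCHEMA)
fetch_req = fetch_schema_request(1)
print(register_req.get_method(), register_req.full_url)
print(fetch_req.get_method(), fetch_req.full_url)
```

Passing either request to `urllib.request.urlopen` would send it; a successful registration responds with a JSON body containing the ID assigned to the schema.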

The flow can be summarized as follows:

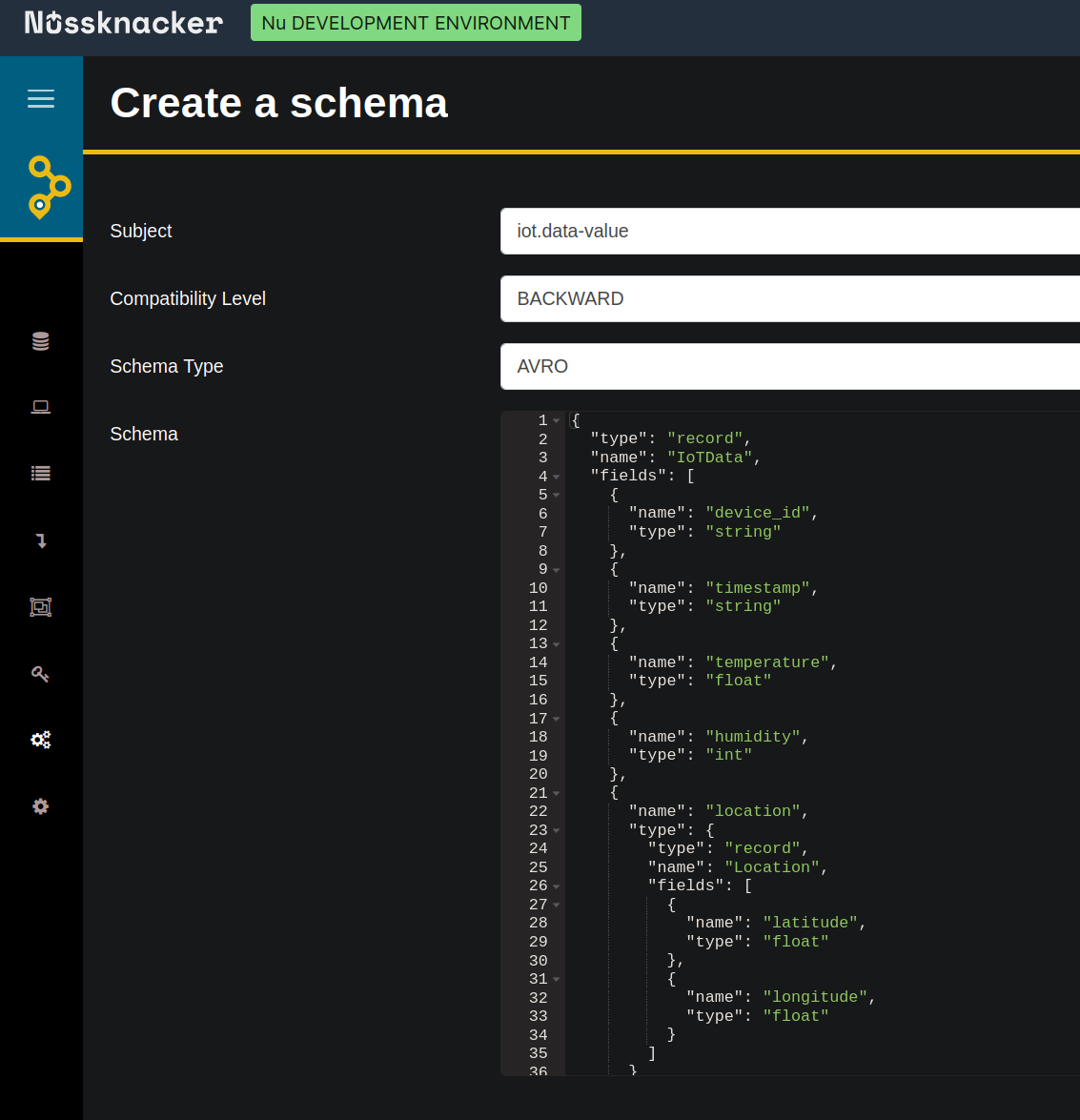

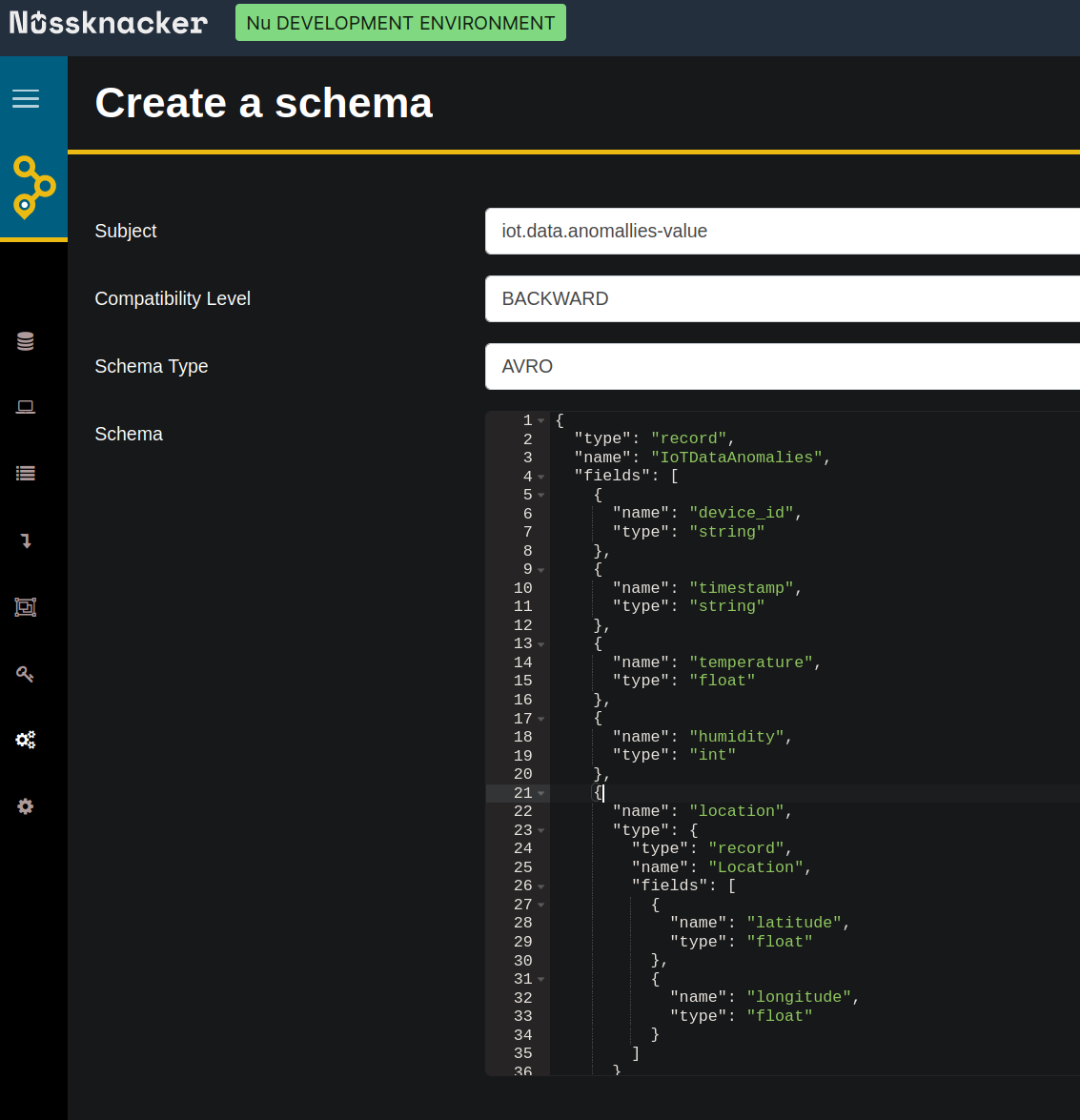

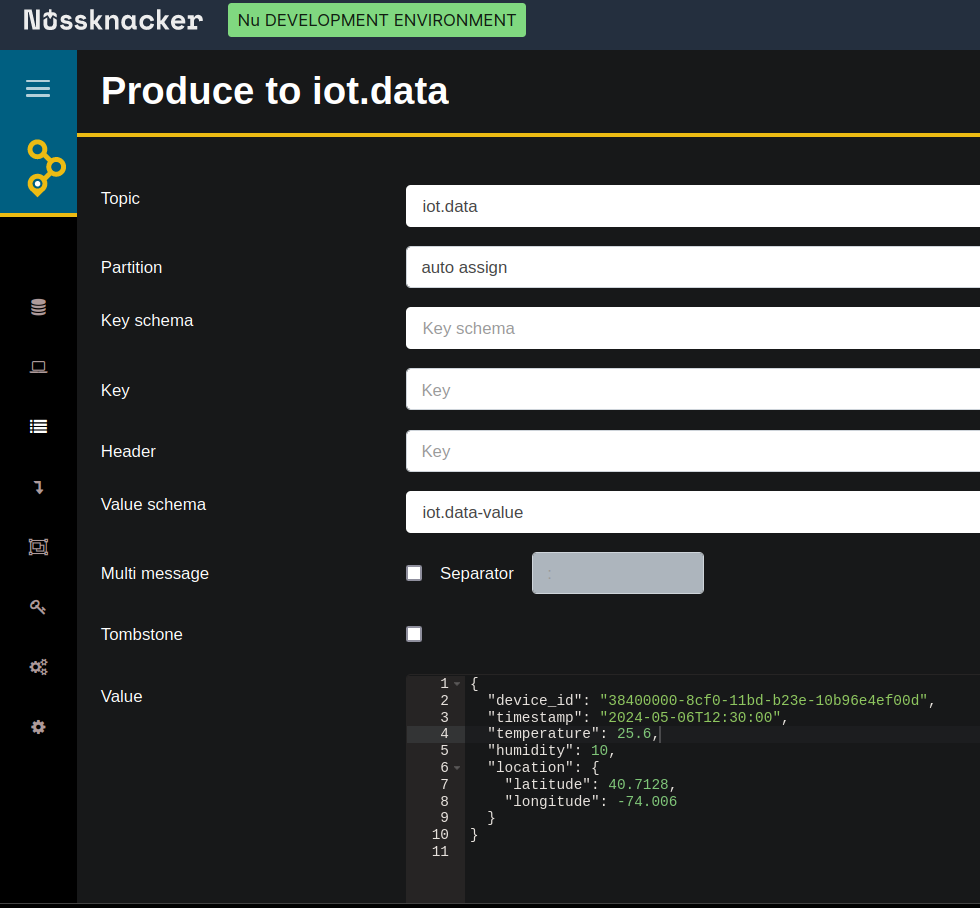

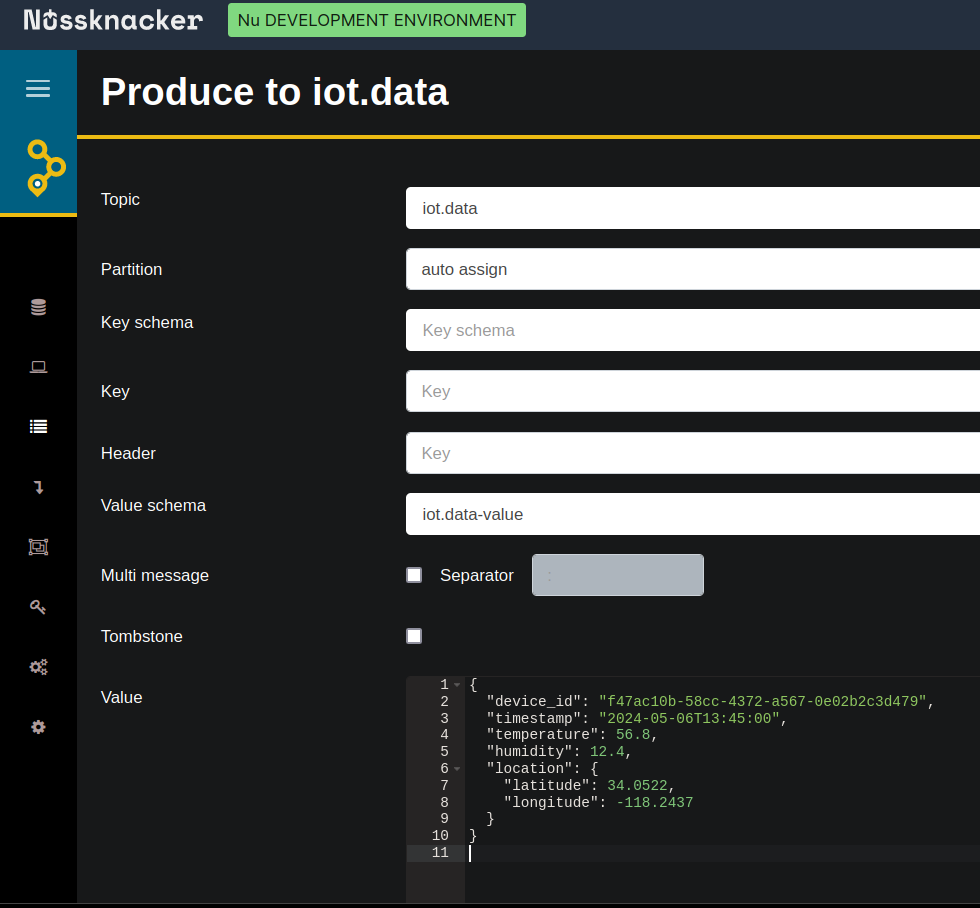

- First, the Avro schema used to encode messages is registered in the Schema Registry.

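- The producer creates Avro-encoded messages and sends them to the Kafka broker along with the registered schema ID. With Confluent's serializers, this schema ID is embedded at the start of the message payload (a magic byte followed by a 4-byte schema ID); other implementations may carry it in a message header instead.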

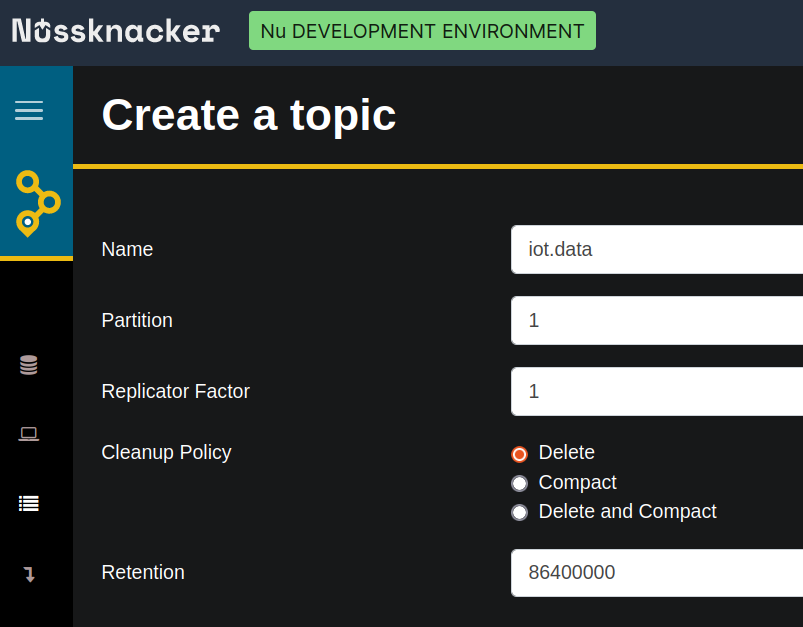

- The Kafka broker stores the Avro-encoded message along with the associated schema ID in the specified topic.

- The consumer reads the Avro-encoded message along with the associated schema ID from the Kafka topic.

- The consumer calls the schema registry API directly to retrieve the schema corresponding to the schema ID extracted from the message. Clients usually cache the result, so the call is not repeated for every message.

- The schema registry responds to the consumer's request by providing the schema associated with the schema ID.

- Using the retrieved schema, the consumer deserializes the Avro-encoded message back into a structured record, which can then be shown in a human-friendly format (e.g. JSON).
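The steps above can be sketched in a few lines of Python. The example assumes Confluent's wire format, in which the serialized message starts with a magic byte `0` and a 4-byte big-endian schema ID, followed by the Avro-encoded data. The `InMemoryRegistry` class is a hypothetical stand-in for a real schema registry, and the payload bytes are a placeholder rather than real Avro output.

```python
import struct
from typing import Dict, Tuple

MAGIC_BYTE = 0  # first byte of every message in Confluent's wire format


class InMemoryRegistry:
    """Hypothetical stand-in for a schema registry: maps schema IDs to schemas."""

    def __init__(self) -> None:
        self._schemas: Dict[int, str] = {}

    def register(self, schema_json: str) -> int:
        # Registering an already known schema returns its existing ID.
        for schema_id, known in self._schemas.items():
            if known == schema_json:
                return schema_id
        schema_id = len(self._schemas) + 1
        self._schemas[schema_id] = schema_json
        return schema_id

    def get_by_id(self, schema_id: int) -> str:
        return self._schemas[schema_id]


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Producer side: prefix the payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Consumer side: split a message into schema ID and Avro payload."""
    if len(message) < 5:
        raise ValueError("message too short to contain a schema ID")
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[5:]


registry = InMemoryRegistry()
schema_json = '{"type": "record", "name": "SensorReading", "fields": []}'

schema_id = registry.register(schema_json)          # schema is registered
message = frame(schema_id, b"<avro bytes>")         # producer sends the message
received_id, payload = unframe(message)             # consumer reads it
schema_for_decoding = registry.get_by_id(received_id)  # consumer fetches the schema
```

Note that the broker never needs to understand the schema: it stores and serves opaque bytes, and only producers and consumers talk to the registry.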

What about JSON schemas? If message size on your topic is not a concern and you would prefer to use JSON Schema instead of Avro, Nussknacker supports that as well.

The integrated AKHQ panel allows you to create JSON schemas as well!
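For comparison with Avro, this is roughly what a JSON Schema for an IoT reading could look like, together with a deliberately tiny check of required fields and primitive types in Python. The schema, its field names, and the `check` helper are assumptions for illustration; the helper covers only the keywords used here and is not a full JSON Schema validator.

```python
import json

# Hypothetical JSON Schema for a sensor reading.
SENSOR_JSON_SCHEMA = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "title": "SensorReading",
    "type": "object",
    "properties": {
        "device_id": {"type": "string"},
        "timestamp": {"type": "integer"},
        "temperature": {"type": "number"},
    },
    "required": ["device_id", "timestamp", "temperature"],
    "additionalProperties": False,
}

# Python types accepted for each JSON Schema primitive used above.
_PY_TYPES = {"string": (str,), "integer": (int,), "number": (int, float)}


def check(reading: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the reading passed."""
    errors = []
    properties = schema["properties"]
    for name in schema.get("required", []):
        if name not in reading:
            errors.append(f"missing required field: {name}")
    for name, value in reading.items():
        if name not in properties:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected field: {name}")
            continue
        expected = _PY_TYPES[properties[name]["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"wrong type for field: {name}")
    return errors


valid = {"device_id": "sensor-42", "timestamp": 1700000000000, "temperature": 21.5}
invalid = {"device_id": "sensor-42", "temperature": "hot", "unit": "C"}

print(json.dumps(SENSOR_JSON_SCHEMA, indent=2))  # text you could paste into a schema editor
print(check(valid, SENSOR_JSON_SCHEMA))
print(check(invalid, SENSOR_JSON_SCHEMA))
```

With JSON Schema the messages on the topic remain plain JSON text, so they are readable without decoding but carry the field names in every message.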