Data Typing and Schemas Handling

Overview

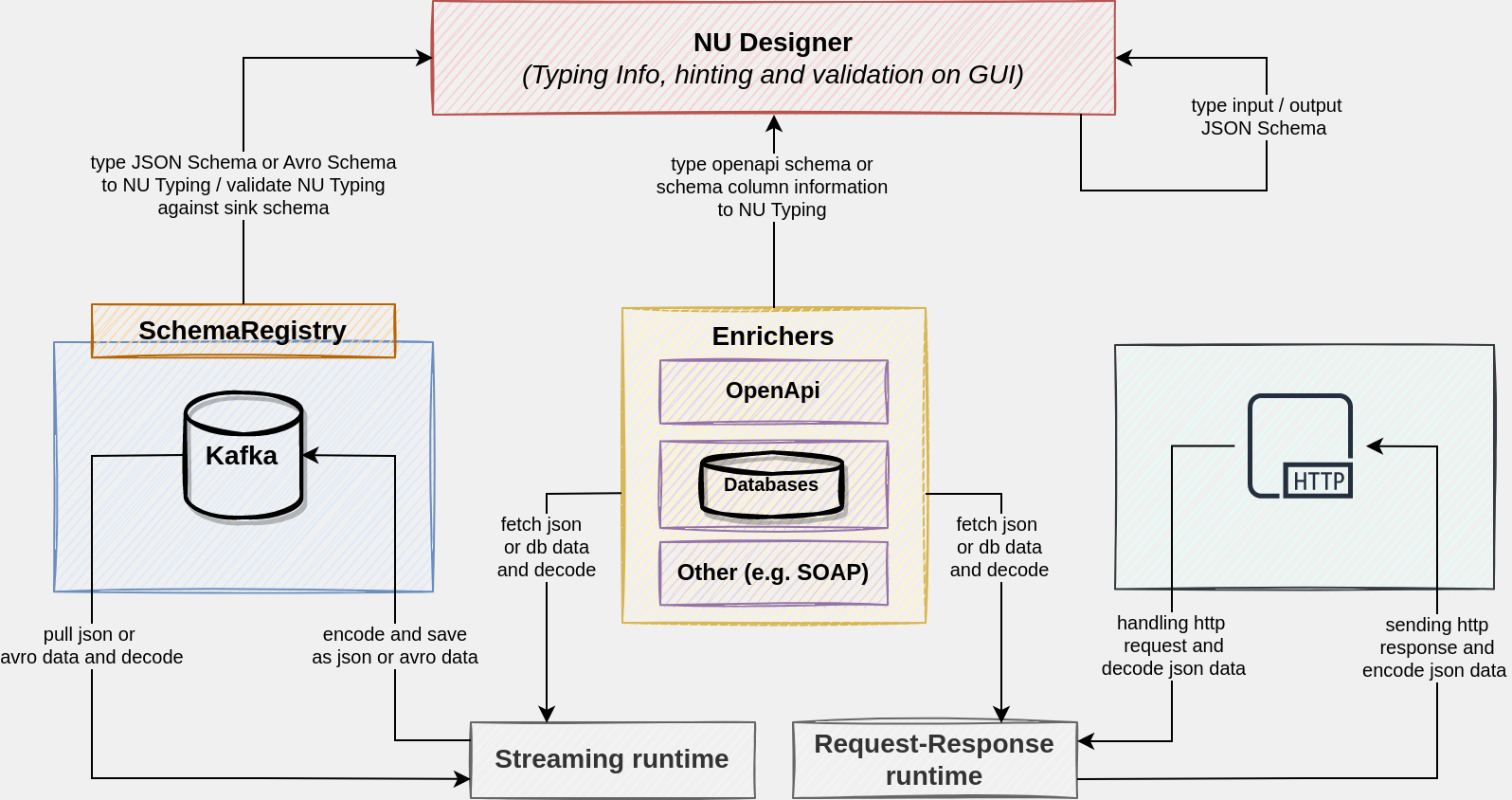

Nussknacker integrates diverse data sources, such as Kafka topics and HTTP requests, and also lets you enrich data using, for example, OpenAPI services or databases. These integrations can return several kinds of data, such as JSON, binary, and database data. In each case the format of the data is described in a different way:

- Request-response inputs and outputs are described by JSON Schema, stored in the properties of the Nussknacker scenario

- A Kafka source with JSON data is described by JSON Schema, stored in the schema registry

- A Kafka source with binary data is described by Avro Schema, stored in the schema registry

- The OpenAPI enricher uses JSON data described by OpenAPI Schema

- Database data is described by JDBC metadata, which contains column information

To provide consistent and proper support for these formats, Nussknacker converts the meta-information about the data to its own Typing Information, which is used on the Designer's part to hint and validate the data. Each part of the diagram is statically validated and typed on an ongoing basis.

Avro Schema

We support Avro Schema version 1.11.0. Avro is available only on Streaming. You need a Schema Registry if you want to use Avro Schema.

Source conversion mapping

Primitive types

| Avro Schema | Java type | Comment |

|---|---|---|

| null | null | |

| string | String / Char | String schema is by default represented by a Java UTF-8 String. Using the advanced configuration option AVRO_USE_STRING_FOR_STRING_TYPE=false, you can change it to Char. |

| boolean | Boolean | |

| int | Integer | 32bit |

| long | Long | 64bit |

| float | Float | single precision |

| double | Double | double precision |

| bytes | ByteBuffer | |

Logical types

Conversion at source to a specific type means that, behind the scenes, Nussknacker converts the primitive type to the logical type, represented by a Java object. Consequently, the end user has access to the methods of these objects.

| Avro Schema | Java type | Sample | Comment |

|---|---|---|---|

| decimal (bytes or fixed) | BigDecimal | | |

| uuid (string) | UUID | | |

| date (int) | LocalDate | 2021-05-17 | Timezone is not stored. |

| time - millisecond precision (int) | LocalTime | 07:34:00.12345 | Timezone is not stored. |

| time - microsecond precision (long) | LocalTime | 07:34:00.12345 | Timezone is not stored. |

| timestamp - millisecond precision (long) | Instant | 2021-05-17T05:34:00Z | Timestamp (millis since 1970-01-01) in human readable format. |

| timestamp - microsecond precision (long) | Instant | 2021-05-17T05:34:00Z | Timestamp (micros since 1970-01-01) in human readable format. |
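To make the relationship between a logical type and its underlying primitive more concrete, here is a minimal Python sketch. It is not Nussknacker code, and the function names are hypothetical. It only assumes the encodings defined by the Avro specification: date is a number of days since 1970-01-01, time with millisecond precision is a number of milliseconds after midnight, and timestamp with millisecond precision is a number of milliseconds since 1970-01-01 UTC.

```python
from datetime import date, datetime, time, timedelta, timezone

# Reference points used by the Avro logical type encodings.
EPOCH_DATE = date(1970, 1, 1)
EPOCH_UTC = datetime(1970, 1, 1, tzinfo=timezone.utc)


def decode_date(days: int) -> date:
    """date (int): days since 1970-01-01, no timezone."""
    return EPOCH_DATE + timedelta(days=days)


def decode_time_millis(millis: int) -> time:
    """time - millisecond precision (int): milliseconds after midnight."""
    return (datetime.min + timedelta(milliseconds=millis)).time()


def decode_timestamp_millis(millis: int) -> datetime:
    """timestamp - millisecond precision (long): milliseconds since epoch, UTC."""
    return EPOCH_UTC + timedelta(milliseconds=millis)


if __name__ == "__main__":
    print(decode_date(18764))
    print(decode_time_millis(27240123))
    print(decode_timestamp_millis(1621229640000).isoformat())
```

The Java types in the table (LocalDate, LocalTime, Instant) play the same role as the Python date, time, and datetime values here: the raw number stays in the Avro payload, and the scenario author works with the richer object.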

Complex types

| Avro Schema | Java type | Comment |

|---|---|---|

| array | list | |

| map | map | Key-value map, where the key is always represented by a String. |

| record | record | |

| enums | org.apache.avro.generic.GenericData.EnumSymbol | |

| fixed | org.apache.avro.generic.GenericData.Fixed | |

| union | Any of the above types | It can be any of the types defined in the union. |

Sink validation & encoding

| Java type | Avro Schema | Comment |

|---|---|---|

| null | null | |

| String | string | |

| Boolean | boolean | |

| Integer | int | |

| Long | long | |

| Float | float | |

| Double | double | |

| ByteBuffer | bytes | |

| list | array | |

| map | map | |

| map | record | |

| org.apache.avro.generic.GenericRecord | record | |

| org.apache.avro.generic.GenericData.EnumSymbol | enums | |

| String | enums | On the Designer we allow passing a value typed as String, but we can't verify whether the value is a valid enum symbol. |

| org.apache.avro.generic.GenericData.Fixed | fixed | |

| ByteBuffer | fixed | On the Designer we allow passing a value typed as ByteBuffer, but we can't verify whether the value is a valid Fixed element. |

| String | fixed | On the Designer we allow passing a value typed as String, but we can't verify whether the value is a valid Fixed element. |

| BigDecimal | decimal (bytes or fixed) | |

| ByteBuffer | decimal (bytes or fixed) | |

| UUID | uuid (string) | |

| String | uuid (string) | On the Designer we allow passing a value typed as String, but we can't verify whether the value is a valid UUID. |

| LocalDate | date (int) | |

| Integer | date (int) | |

| LocalTime | time - millisecond precision (int) | |

| Integer | time - millisecond precision (int) | |

| LocalTime | time - microsecond precision (long) | |

| Long | time - microsecond precision (long) | |

| Instant | timestamp - millisecond precision (long) | |

| Long | timestamp - millisecond precision (long) | |

| Instant | timestamp - microsecond precision (long) | |

| Long | timestamp - microsecond precision (long) | |

| Any matching type from the list of types in the union schema | union | Read more about validation modes. |

If at runtime a value cannot be converted to the appropriate logical type (e.g. "notAUUID" cannot be converted to a proper UUID), an error is reported.
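The following Python sketch illustrates why this check can only happen at runtime: the type String says nothing about the content of the string. It is an illustration only, with a hypothetical helper name, and it does not reproduce how Nussknacker encodes values.

```python
import uuid


def encode_uuid(value: str) -> str:
    """Return the canonical UUID text, or raise ValueError for invalid input.

    Hypothetical helper: it stands in for the runtime conversion of a
    String value to the uuid (string) logical type.
    """
    return str(uuid.UUID(value))


if __name__ == "__main__":
    print(encode_uuid("123e4567-e89b-12d3-a456-426614174000"))
    try:
        encode_uuid("notAUUID")
    except ValueError as error:
        print(f"encoding error: {error}")
```

Both calls pass a value typed as String, so a type-level check accepts both. Only the actual value reveals that the second one is not a UUID.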

JSON Schema

We support JSON Schema in version: Draft 7 without:

- Numbers with a zero fractional part (e.g. 1.0) as a proper value on decoding (deserialization) for integer schema

- Recursive schemas

- Anchors
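The first limitation is easy to overlook, because JSON Schema Draft 7 itself treats 1.0 as a valid integer. The Python sketch below mimics the stricter behaviour described above. The function name is hypothetical and the code is not the Nussknacker decoder.

```python
import json


def decode_integer(raw: str) -> int:
    """Decode a JSON value for an integer schema, rejecting values like 1.0."""
    value = json.loads(raw)
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        raise ValueError(f"{raw!r} is not accepted for an integer schema")
    return value


if __name__ == "__main__":
    print(decode_integer("1"))
    try:
        decode_integer("1.0")
    except ValueError as error:
        print(f"decoding error: {error}")
```

If a producer may emit values such as 1.0, declaring the field as number instead of integer avoids the decoding error.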

JSON Schema is available on Streaming and Request-Response. On Streaming, schemas are stored in the Schema Registry. On Request-Response, schemas are stored in scenario properties.

Source conversion mapping

| JSON Schema | Java type | Comment |

|---|---|---|

| null | null | |

| string | String | UTF-8 |

| boolean | Boolean | |

| integer | Long | 64bit |

| number | BigDecimal | |

| enum | String | |

| array | list | |

String Format

| JSON Schema | Java type | Sample | Comment |

|---|---|---|---|

| date-time | ZonedDateTime | 2021-05-17T07:34:00+01:00 | |

| date | LocalDate | 2021-05-17 | Timezone is not stored |

| time | LocalTime | 07:34:00.12345 | Timezone is not stored |

Objects

| object configuration | Java type | Comment |

|---|---|---|

| object with properties | map | Map[String, _] |

| object with properties and enabled additionalProperties | map | Additional properties are available at runtime. Similar to Map[String, _]. |

| object without properties and additionalProperties: true | map | Map[String, Unknown] |

| object without properties and additionalProperties: {"type": "integer"} | map | Map[String, Integer] |

We support additionalProperties, but additional fields won't be available in the hints on the Designer. To get an additional field you have to use #input.get("additional-field"), but remember that the type of the result of this expression depends on the additionalProperties type configuration and can be Unknown.
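As a rough illustration of that rule, the Python sketch below resolves the type of a field from an object schema. It is a simplified model with a hypothetical function name, it returns JSON Schema type names rather than Java types, and it assumes the JSON Schema default of additionalProperties: true when the keyword is absent.

```python
def field_type(schema: dict, name: str) -> str:
    """Return the declared type of a field, or "Unknown" if it cannot be known."""
    properties = schema.get("properties", {})
    if name in properties:
        # Declared fields are the ones that can be hinted during authoring.
        return properties[name].get("type", "Unknown")
    additional = schema.get("additionalProperties", True)
    if additional is False:
        raise KeyError(f"field {name!r} is not allowed by the schema")
    if isinstance(additional, dict):
        # additionalProperties given as a schema: its type applies to all extra fields.
        return additional.get("type", "Unknown")
    return "Unknown"


if __name__ == "__main__":
    schema = {
        "type": "object",
        "properties": {"id": {"type": "integer"}},
        "additionalProperties": {"type": "string"},
    }
    print(field_type(schema, "id"))
    print(field_type(schema, "additional-field"))
    print(field_type({"type": "object"}, "additional-field"))
```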

Schema Composition

| type | Java type | Comment |

|---|---|---|

| oneOf | Any from the available list of the schemas | We treat it just like a union. |

| anyOf | Any from the available list of the schemas | We treat it just like a union. |

Sink validation & encoding

| Java type | JSON Schema | Comment |

|---|---|---|

| null | null | |

| String | string | |

| Boolean | boolean | |

| Integer | integer | |

| Long | integer | |

| Float | number | |

| Double | number | |

| BigDecimal | number | |

| list | array | |

| map | object | |

| String | enum | On the Designer we allow passing Typed[String], but we can't verify whether the value is a valid enum symbol. |

| Any matching type from the list of types in the union schema | schema composition: oneOf, anyOf | Read more about validation modes. |

Properties not supported on the Designer

| JSON Schema | Properties | Comment |

|---|---|---|

| string | length, regular expressions, format | |

| numeric | multiples, range | |

| array | additional items, tuple validation, contains, min/max, length, uniqueness | |

| object | pattern properties, unevaluatedProperties, extending closed, property names, size | |

| composition | allOf, not | Read more about validation modes. |

These properties will not be validated by the Designer, because during scenario authoring we work only on Typing Information, not on real values. Validation will still be done at runtime.

Validation and encoding

As we can see on the diagram above, preparing data for a sink (e.g. Kafka sink / response sink) is divided into two parts:

- during validation on the Designer, the Typing Information is compared against the sink schema

- during encoding at runtime, the data is converted, based on its type, to the internal representation expected by the sink

Some situations are not so obvious to handle, e.g. how we should pass and validate Unknown and Union types.

type Unknown

A situation in which Nussknacker cannot detect the type of the data. It is similar to the Any type.

type Union

A situation in which the data can have any of several representations.

In the case of Union and Unknown types, the actual data type is known only at runtime, and only then can the decision on how to encode be taken. Sometimes encoding will not be possible because the actual data type does not match the data type expected by the sink, and a runtime error will be reported. The number of runtime encoding errors can be reduced by applying strict schema validation rules during scenario authoring. This is where the validation mode comes in.

Validation modes

Validation modes determine how Nussknacker validates Typing Information against the sink schema during scenario authoring. You can choose the validation mode by setting the Value validation mode parameter on sinks where the raw editor is enabled.

| | Strict mode | Lax mode | Comment |

|---|---|---|---|

| allow passing additional fields | no | yes | This option applies only to Avro Schema. JSON Schema manages additional fields explicitly through the schema property additionalProperties. |

| require providing optional fields | yes | no | |

| allow passing Unknown | no | yes | When data at runtime does not match the sink schema, an error is reported during encoding. |

| passing Union | The Typing Information union has to be the same as the union schema of the sink | Any element of the Typing Information union should match | When data at runtime does not match the sink schema, an error is reported during encoding. |

We leave the choice of validation mode to the user. Keep in mind that it only affects how data is validated during scenario authoring, and some errors can still occur during encoding at runtime.
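The difference between the two modes can be sketched in a few lines of Python. This is a simplified model, not the Nussknacker validator: the function name, the split of the sink schema into required and optional fields, and the use of plain type names are all assumptions made for illustration. Union handling is left out, and the additional-fields rule reflects the Avro case only.

```python
from typing import Dict, List

UNKNOWN = "Unknown"


def validate(typing_info: Dict[str, str], required: Dict[str, str],
             optional: Dict[str, str], mode: str) -> List[str]:
    """Compare field types known at authoring time with a sink schema."""
    if mode not in ("strict", "lax"):
        raise ValueError(f"unsupported validation mode: {mode}")
    strict = mode == "strict"
    schema = {**required, **optional}
    errors = []
    for name in required:
        if name not in typing_info:
            errors.append(f"missing required field: {name}")
    if strict:
        # Strict mode requires optional fields to be provided as well.
        for name in optional:
            if name not in typing_info:
                errors.append(f"missing optional field: {name}")
    for name, type_name in typing_info.items():
        if name not in schema:
            if strict:
                errors.append(f"additional field not allowed: {name}")
        elif type_name == UNKNOWN:
            if strict:
                errors.append(f"Unknown not allowed: {name}")
        elif type_name != schema[name]:
            errors.append(f"type mismatch for {name}: {type_name} vs {schema[name]}")
    return errors


if __name__ == "__main__":
    typing_info = {"id": "Long", "extra": "String", "note": UNKNOWN}
    required = {"id": "Long"}
    optional = {"note": "String", "tag": "String"}
    print(validate(typing_info, required, optional, "strict"))
    print(validate(typing_info, required, optional, "lax"))
```

In this example strict mode reports three problems (a missing optional field, an additional field, and an Unknown value), while lax mode accepts the same input and defers the remaining checks to encoding at runtime.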