How to use Nussknacker with Snowflake and Confluent Cloud

Snowflake Data Cloud Platform is a popular data platform that can be used to store and analyse your data. Fundamentally, that data is ‘at rest’. So what should you do if you want to make timely decisions based on the most recent information you have?

If you want to combine data ‘at rest’ with data ‘in motion’, one obvious piece of advice is to make the data in your lakehouse as up-to-date as possible. There are many tools, including solutions from Confluent and Snowflake, that can help you push your data streams into your lakehouse. That approach is fine and in many cases sufficient, but in the end it is still not real-time: you are periodically analysing data at rest, even if that data is quite up-to-date.

And what if what you need is to make decisions and take actions in real-time? You should then consider doing it the other way round: analyse your data streams, in motion, enriching them with all the knowledge you have gathered in Snowflake Data Cloud.

Nussknacker makes it easy to do just that. To show you how, we will use Nu Cloud to walk you through combining streaming data in Confluent Cloud with data stored in Snowflake Data Cloud.

Enrich Confluent events with Snowflake data in Nu Cloud

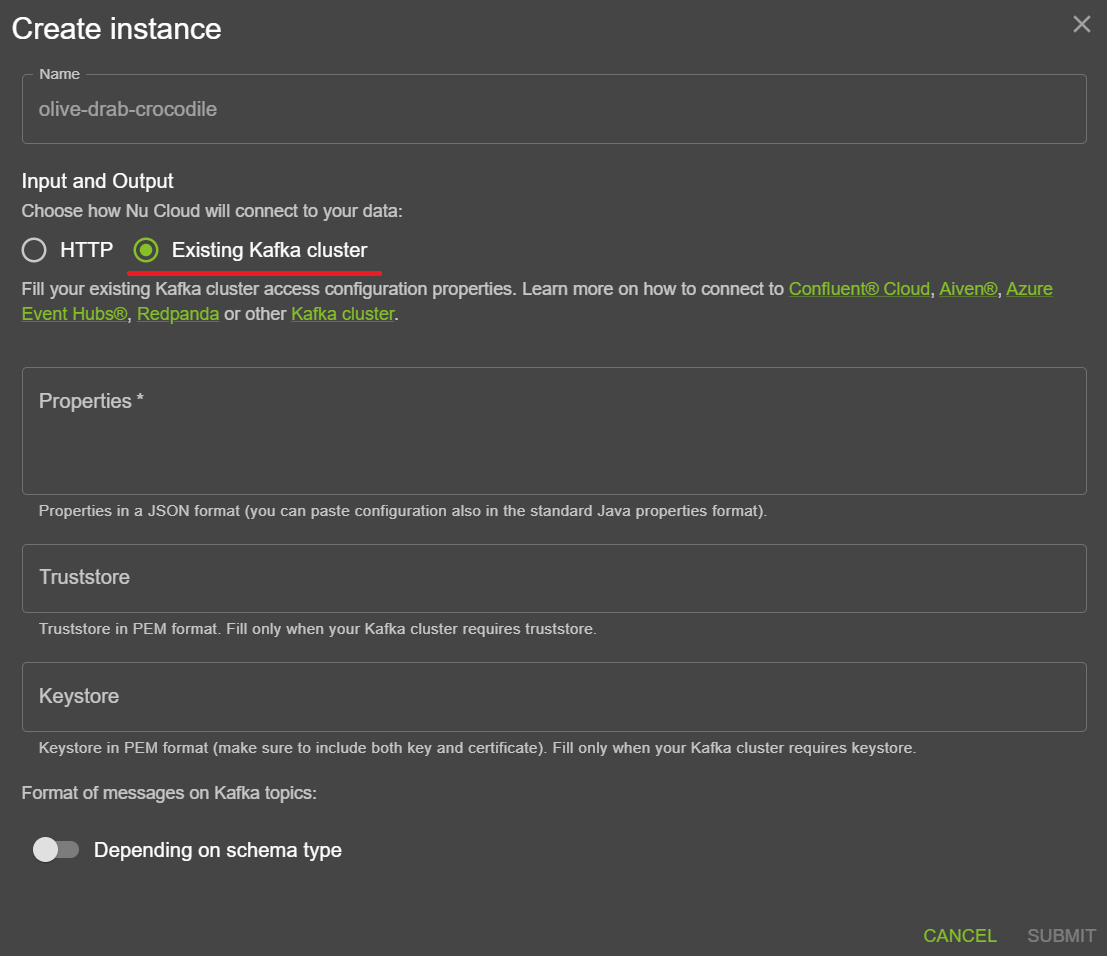

Let’s start by signing into Nussknacker Cloud and creating a Nussknacker instance. We want to connect to your streams in Confluent Cloud, so it should be an instance with an existing Kafka cluster.

For more details, see Connecting to Apache Kafka® in Confluent® Cloud.

Once the instance is ready, stop it and click EDIT to change its configuration. At the bottom of the screen choose the Snowflake tile. An enricher parameters screen will open.

The first parameter, component name, is just the name that helps you find the enricher in the components list when editing a Nussknacker scenario. It can be anything you want, but it’s best to keep it short.

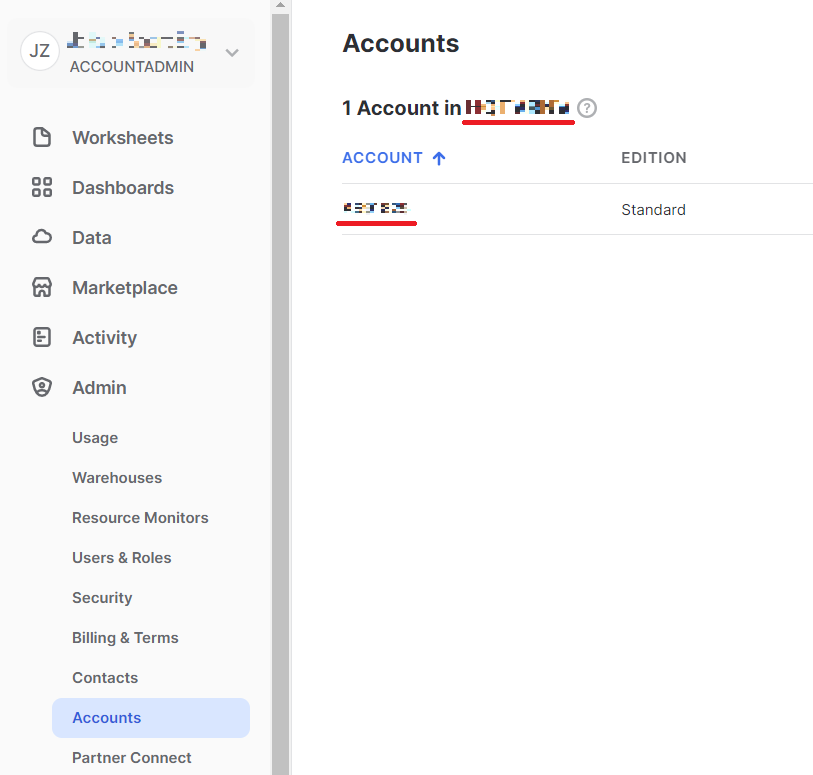

The rest of the parameters come from your Snowflake account. Go to Admin | Accounts to find your organisation name and account name.

Then go to Admin | Users & Roles, create a new, read-only user, and use its credentials (username and password) in the enricher configuration.
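If you prefer to script the read-only user instead of clicking through the UI, the sketch below generates the Snowflake SQL statements you would typically run in a worksheet. It is only an illustration: the function name and all identifiers (`NU_READER`, `NU_READ_ONLY`, `CRM`, `PUBLIC`) are hypothetical, the optional warehouse grant is an assumption not covered by the enricher parameters above, and the password is deliberately left out so you can set it separately.

```python
import re
from typing import List, Optional

# Only plain, unquoted identifiers are accepted, to keep the generated SQL safe.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def read_only_user_statements(
    user: str,
    role: str,
    database: str,
    schema: str,
    warehouse: Optional[str] = None,  # assumption: not part of the enricher form
) -> List[str]:
    """Build SQL statements that create a user who can only read one schema."""
    for name in filter(None, (user, role, database, schema, warehouse)):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")

    target = f"{database}.{schema}"
    statements = [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {target} TO ROLE {role}",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {target} TO ROLE {role}",
        # No password here on purpose; set it in the UI or with ALTER USER.
        f"CREATE USER IF NOT EXISTS {user} DEFAULT_ROLE = {role}",
        f"GRANT ROLE {role} TO USER {user}",
    ]
    if warehouse:
        statements.append(f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}")
    return statements


if __name__ == "__main__":
    for statement in read_only_user_statements(
        "NU_READER", "NU_READ_ONLY", "CRM", "PUBLIC"
    ):
        print(statement + ";")
```

The role only receives `USAGE` and `SELECT` grants, so the credentials you paste into the enricher configuration cannot modify your data.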

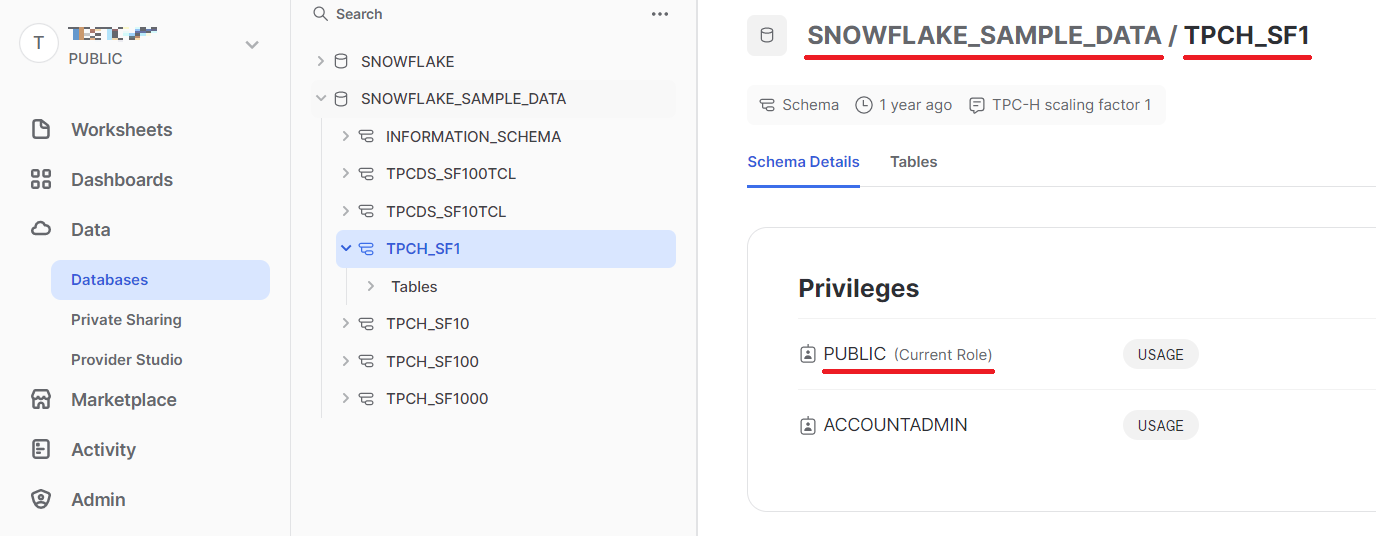

To find the last three parameters, database, schema, and role, go to Data | Databases.
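To keep track of what goes where, here is a small sketch that gathers the enricher parameters in one place. The class and field names are hypothetical and do not mirror Nussknacker's internal configuration. The two derived values follow Snowflake's documented conventions: an account identifier of the form `<organisation>-<account>` and a JDBC URL with `db`, `schema` and `role` connection parameters. Whether Nu Cloud builds exactly this URL behind the form is an assumption.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode


@dataclass(frozen=True)
class SnowflakeEnricherParams:
    """Hypothetical container for the values entered in the enricher form."""

    component_name: str  # free-form name shown in the components list
    organisation: str    # from Admin | Accounts
    account: str         # from Admin | Accounts
    username: str        # the read-only user
    password: str = field(repr=False)  # keep the secret out of logs
    database: str = ""   # from Data | Databases
    schema: str = ""
    role: str = ""

    @property
    def account_identifier(self) -> str:
        # Snowflake's preferred identifier format: <orgname>-<account_name>
        return f"{self.organisation}-{self.account}"

    def jdbc_url(self) -> str:
        # Assumption: a standard Snowflake JDBC URL is what ends up being used.
        query = urlencode(
            {"db": self.database, "schema": self.schema, "role": self.role}
        )
        host = f"{self.account_identifier}.snowflakecomputing.com"
        return f"jdbc:snowflake://{host}/?{query}"


if __name__ == "__main__":
    params = SnowflakeEnricherParams(
        component_name="customers",
        organisation="MYORG",
        account="MYACCOUNT",
        username="NU_READER",
        password="change-me",
        database="CRM",
        schema="PUBLIC",
        role="NU_READ_ONLY",
    )
    print(params.account_identifier)
    print(params.jdbc_url())
```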

Once you have filled in all the parameters, click SUBMIT, then SAVE CHANGES, and start your Nussknacker instance again.

To start working with your data streams, choose source and sink topics in your scenarios, just as you would with any other Kafka cluster you connect Nussknacker to (you can learn more here: Streaming specific components).

Enriching that data is equally straightforward. The Snowflake enrichers we’ve just configured work exactly the same way as regular Nussknacker SQL enrichers. There are two variants: a simpler lookup enricher and a more versatile query enricher. You can learn more about them here: SQL enricher.
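To make the difference between the two variants more concrete, here is a rough sketch of what each does conceptually. It is not Nussknacker's implementation: an in-memory SQLite database stands in for Snowflake, and the table names, column names and event fields (`customers`, `orders`, `customerId`) are invented for illustration. The lookup variant fetches one row by key, while the query variant runs an arbitrary parameterised SQL statement.

```python
import sqlite3


def make_profile_store() -> sqlite3.Connection:
    """Create a tiny in-memory database that plays the role of Snowflake."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row  # rows can be converted to dicts
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, segment TEXT)"
    )
    conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "Alice", "gold"), (2, "Bob", "standard")],
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [(1, 120.0), (1, 80.0), (2, 15.0)],
    )
    return conn


def lookup_enrich(conn: sqlite3.Connection, event: dict) -> dict:
    """Lookup style: fetch a single row from one table by its key."""
    row = conn.execute(
        "SELECT * FROM customers WHERE id = ?", (event["customerId"],)
    ).fetchone()
    return {**event, "profile": dict(row) if row else None}


def query_enrich(conn: sqlite3.Connection, event: dict) -> dict:
    """Query style: run a parameterised SQL query, here a join with aggregation."""
    rows = conn.execute(
        "SELECT c.segment AS segment, SUM(o.amount) AS total_spent "
        "FROM customers c JOIN orders o ON o.customer_id = c.id "
        "WHERE c.id = ? GROUP BY c.segment",
        (event["customerId"],),
    ).fetchall()
    return {**event, "stats": [dict(r) for r in rows]}


if __name__ == "__main__":
    store = make_profile_store()
    click = {"customerId": 1, "action": "page_view"}  # an event from a Kafka topic
    print(lookup_enrich(store, click))
    print(query_enrich(store, click))
```

In a real scenario you would not write this code at all. You pick the enricher from the components list and fill in the table and key, or the query and its parameters, in the scenario editor.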

Combine behaviour with prior knowledge

If you wonder why you would need all the data from your Snowflake Data Cloud when processing your data streams, think for example about your customers’ behaviours and all the prior, ‘static’ knowledge you have about them.

The power of Snowflake is that you can build customer profiles with a 360° view: all the relevant information about your customers in one place. The power of Kafka and Confluent Cloud is that they allow you to track your customers’ behaviour in real-time.

They are great tools on their own, but you get the most value if you use them together: react to current behaviour using all the prior knowledge. Nussknacker not only makes this possible, it makes it possible for non-developers, shortening the path from an idea to acting on data in motion together with data at rest.