Nussknacker is a powerful open-source tool designed for real-time decision-making and event stream processing. It comes with three predefined processing modes: Streaming, perfect for continuous data flows; Batch, for processing data in sequential chunks; and Request-Response, ideal when data needs to be processed on demand.

One notable implementation of Nussknacker's Request-Response (RR) mode is at a major Polish telecommunications company. We helped them adapt the standard Request-Response mode to their specific domain needs, which gave the Nussknacker RR mode a whole new flavour.

Getting Started with Nussknacker

If you are familiar with Nussknacker, you might know that setting up a standard Request-Response scenario is straightforward and involves a few basic steps:

- Create a new scenario.

- Build the logic using nodes.

- Deploy the scenario.

From this point on, you can benefit from your deployed scenario. As your automation needs expand, so will your scenario, which can become too complex to manage in one place. While parts of the algorithm can be broken down into smaller, reusable fragments, we reached a stage with our client where a more custom and sophisticated solution was necessary. To illustrate this without revealing any confidential details, let's use the example of a legitimate business from Scranton, Pennsylvania.

Nussknacker in a Dunder Mifflin-like company

Imagine working at Dunder Mifflin🧑🏻‍💼, a paper-selling company that is moving its customer service online (thanks to its new, young executive staff). The company's goal is to display comprehensive client information and recommendations on screen whenever a client calls. Previously, this information was kept in your notepad or in your memory. Now, you have access to various data sources, such as:

- Orders: Information about recent orders and their amounts.

- User Needs: Customer requirements, monthly paper usage, and special requests.

- Shipping: Shipping events for specific customers.

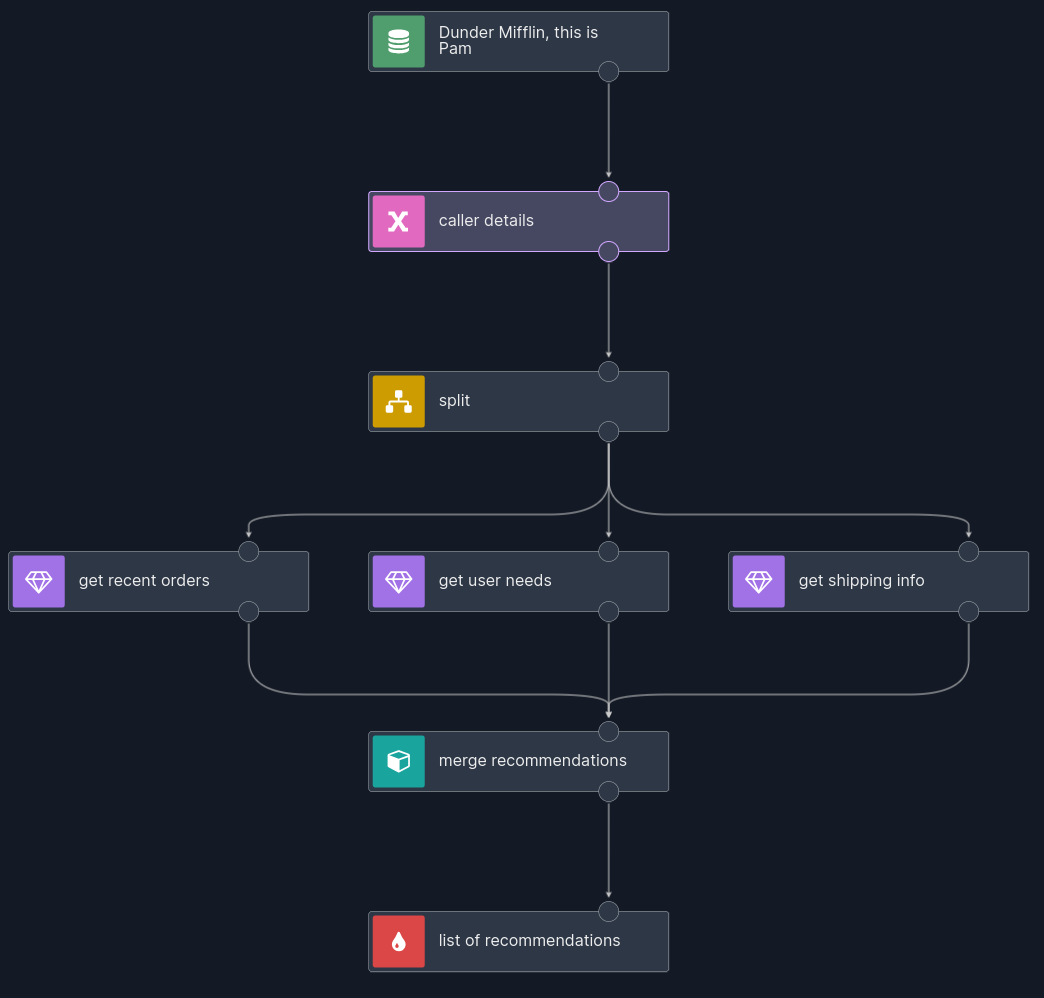

A straightforward implementation would be to create a 'recommendations' scenario that accepts a client number in a POST request and fetches information from these services in parallel.
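To make the parallel fetch concrete, here is a minimal sketch written in Python for brevity. It does not use Nussknacker's API: the three service functions and their payload fields are hypothetical stand-ins, and `asyncio.gather` plays the role of the parallel branches in the scenario.

```python
import asyncio


# Hypothetical stand-ins for the Orders, User Needs and Shipping services;
# in Nussknacker they would be called from nodes in the scenario.
async def get_recent_orders(client_id: str) -> list:
    await asyncio.sleep(0.01)  # simulate network latency
    return [{"paper_type": "A4", "amount": 500}]


async def get_user_needs(client_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"monthly_usage": 2000, "special_requests": []}


async def get_shipping(client_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"status": "DELIVERED", "date": "2024-05-02"}]


async def recommendations(client_id: str) -> dict:
    # Send all three requests at once and wait until every one has answered.
    orders, needs, shipping = await asyncio.gather(
        get_recent_orders(client_id),
        get_user_needs(client_id),
        get_shipping(client_id),
    )
    # Raw aggregation: each service's payload is passed through unchanged.
    return {"clientId": client_id, "orders": orders,
            "needs": needs, "shipping": shipping}


if __name__ == "__main__":
    print(asyncio.run(recommendations("C-001")))
```

Because nothing is post-processed, the caller receives every field exactly as each service returned it.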

However, the raw data from these services might be too complex to interpret quickly during a call. Imagine the raw JSON output of a scenario that aggregates responses from several services.

We could try to improve the presentation on the frontend, but that's not our preferred approach. Our goal is to deliver ready-to-display information to the frontend instead of requiring it to do additional processing.

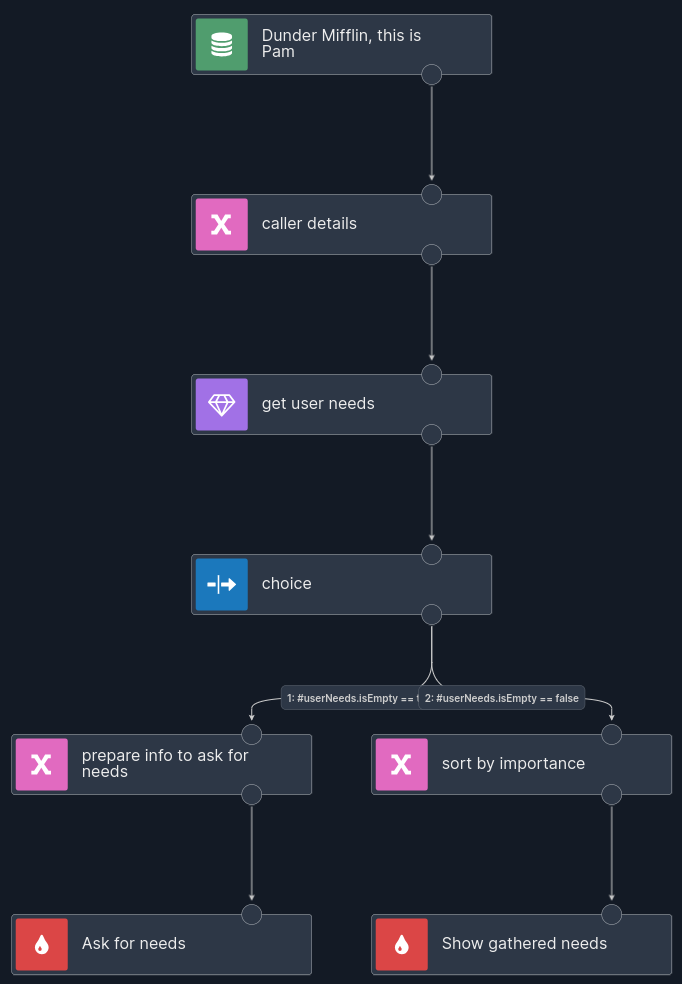

To enhance the usability of the scenario we have created, we need a tailored approach. For instance:

- In the "get shipping" section, display only shipping issues and the latest successful shipment, and mark the problems the client has already been informed about.

- In "get recent orders", show average amounts per paper type.

- In "get user Needs" ask for specific needs if none are recorded.
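The three rules above could be expressed roughly as follows. This is a Python sketch under assumed data shapes, not the logic used in the actual scenario: the field names (`status`, `date`, `client_informed`, `paper_type`, `amount`) and the `"DELIVERED"` status value are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def summarize_shipping(events: List[dict]) -> dict:
    """Keep shipping issues plus the latest successful shipment."""
    issues = [
        # Flag issues the client has already been told about (assumed field).
        {**e, "client_informed": e.get("client_informed", False)}
        for e in events
        if e["status"] != "DELIVERED"
    ]
    delivered = [e for e in events if e["status"] == "DELIVERED"]
    # ISO-8601 date strings sort chronologically, so max() finds the latest.
    latest = max(delivered, key=lambda e: e["date"], default=None)
    return {"issues": issues, "latest_successful": latest}


def average_per_paper_type(orders: List[dict]) -> Dict[str, float]:
    """Average order amount for each paper type."""
    amounts: Dict[str, List[float]] = defaultdict(list)
    for order in orders:
        amounts[order["paper_type"]].append(order["amount"])
    return {paper: sum(a) / len(a) for paper, a in amounts.items()}


def needs_prompt(needs: dict) -> Optional[str]:
    """Return a prompt for the agent when no needs are recorded."""
    if not any(needs.values()):
        return "Ask the client about their paper needs."
    return None
```

Each rule turns raw service data into something an agent can read at a glance, which is exactly the work we want to keep out of the frontend.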

The second iteration of the scenario for our paper company now includes this post-processing logic.

It is a bit more complicated, but still manageable. Now, consider introducing new services into the mix:

- Truck Position: Provides information about the truck heading to the client, or nothing if no shipment is in progress.

- Batch issues number: Reports known batch issues, potentially involving low-quality products received by the client.

- Upsell ML: Suggests products of potential interest to the client based on their order history, utilising some smart ML model.

- Open complaint: Displays details about ongoing client complaints and their current status.

Any other service could be added here as well. If you're familiar with the range of data available today, you know that the number of services could reach dozens or even hundreds. A single scenario that gathers all the user information would no longer be the right fit.

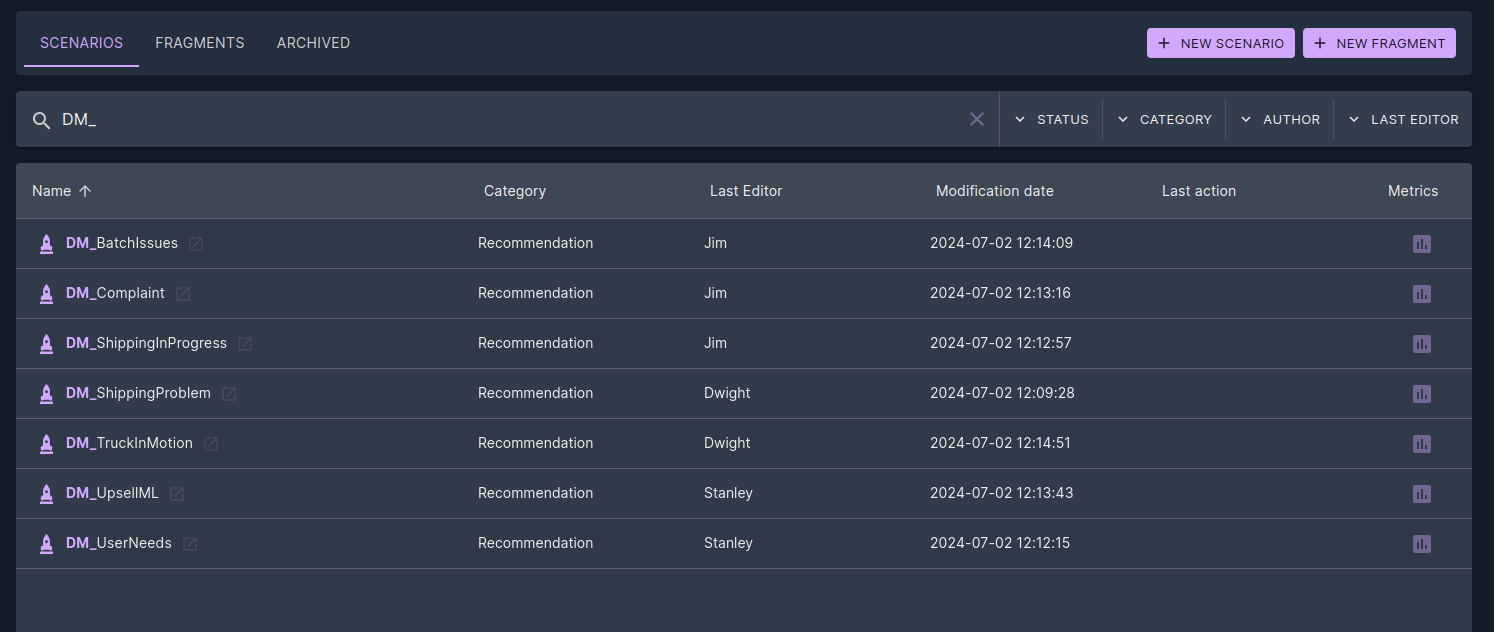

We could separate the logic for each piece of user information into individual scenarios, and this could work for some time. However, each scenario would then have to be called separately. Even if that isn't an issue, adding a scenario for an additional service or piece of user information would require updating the list of requests, and retiring a scenario that no longer serves a purpose would mean removing it from that list by hand, which is error-prone. Managing these scenarios manually would also be challenging for a team working on the project simultaneously.

Making recommendations easy to read, edit and extend

To address such complexities, an extra layer of abstraction can be created by wrapping Nussknacker inside a Spring application. This customization involves:

- A new category, "recommendations".

- Consistent source and sink schemas for all scenarios in this category.

- A unified endpoint: all scenarios are available under "/recommendations".

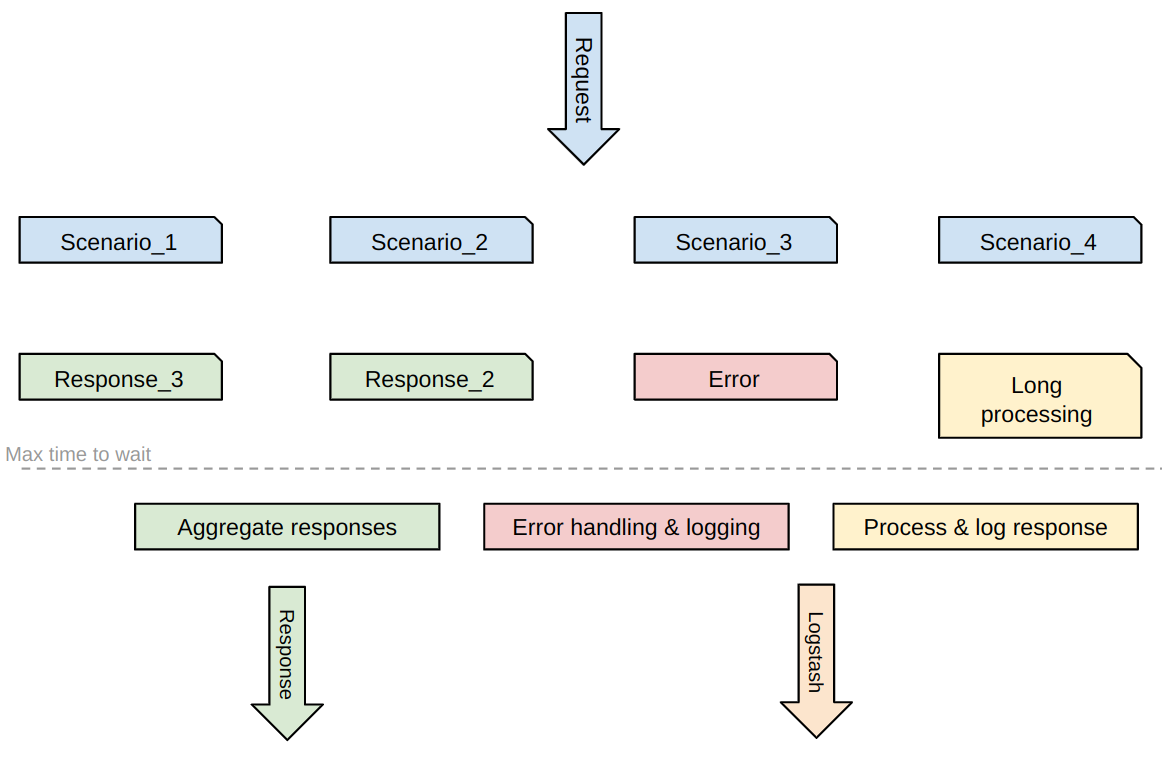

When "/recommendations" is called, all deployed scenarios execute in parallel, and their responses are aggregated into a list of recommendations.
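The fan-out and aggregation behind "/recommendations" might look something like the Python sketch below. It is an illustration under stated assumptions, not the Spring wrapper itself: the `DEPLOYED` registry, the `deploy` decorator, and the `RecommendationRequest`/`Recommendation` schemas are hypothetical stand-ins for the category's deployed scenarios and their shared source and sink schemas.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List, Optional


@dataclass(frozen=True)
class RecommendationRequest:  # shared source schema (assumed)
    client_id: str


@dataclass(frozen=True)
class Recommendation:  # shared sink schema (assumed)
    scenario: str
    message: str


Scenario = Callable[[RecommendationRequest], Awaitable[Optional[Recommendation]]]

# Hypothetical registry of scenarios deployed in the "recommendations" category.
DEPLOYED: Dict[str, Scenario] = {}


def deploy(name: str) -> Callable[[Scenario], Scenario]:
    """Register a scenario; no caller-side list of endpoints has to change."""
    def register(fn: Scenario) -> Scenario:
        DEPLOYED[name] = fn
        return fn
    return register


@deploy("shipping")
async def shipping_scenario(req: RecommendationRequest) -> Optional[Recommendation]:
    return Recommendation("shipping", f"No open shipping issues for {req.client_id}")


@deploy("upsell")
async def upsell_scenario(req: RecommendationRequest) -> Optional[Recommendation]:
    return None  # nothing worth suggesting for this client


async def handle_recommendations(req: RecommendationRequest) -> List[Recommendation]:
    # Every deployed scenario receives the same input and runs concurrently.
    results = await asyncio.gather(*(scenario(req) for scenario in DEPLOYED.values()))
    # Scenarios with nothing to say return None and are left out of the list.
    return [r for r in results if r is not None]
```

Because the endpoint iterates over whatever is deployed, adding or retiring a scenario changes the registry only; nothing on the calling side has to be edited, and the shared schemas are what make the results safe to merge into one list.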

This approach makes scenarios easier to read, edit, and extend. Sales personnel or business specialists can handle and update scenarios efficiently, ensuring that customer service remains top-notch.

Navigating the recommendation scenarios is also easy, as they are all located in the Scenarios window under the same category. Adding a new scenario or modifying an existing one is simple, and several people can work on scenarios at the same time.

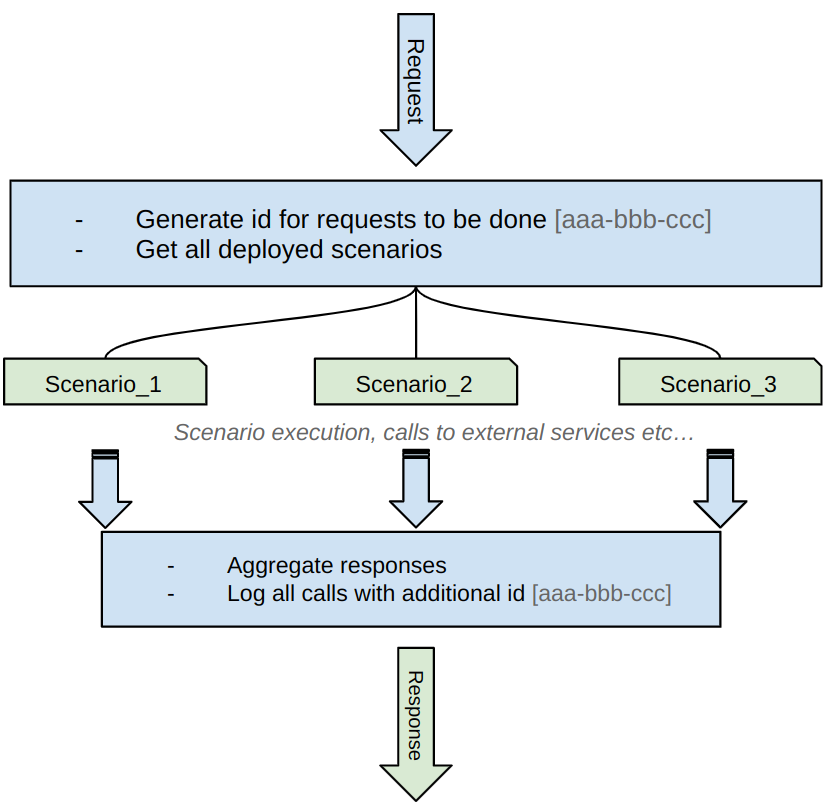

Technical insights: flexibility and customisation

Behind the scenes, we've made some improvements to enhance the overall experience. For instance, when multiple scenarios run simultaneously, each sending requests to external services, debugging and error tracing can be challenging. To tackle this, we've introduced custom correlation IDs. These unique IDs are assigned to each request and logged along with other details, allowing us to track requests across all scenarios and nodes in real time.
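The correlation-ID idea can be illustrated with Python's standard `contextvars` and `logging` modules. This is a sketch of the general technique, not the mechanism used in the Nussknacker wrapper; `handle_request` and `call_external_service` are hypothetical placeholders for request handling and a node that calls an external service.

```python
import contextvars
import logging
import uuid

# Holds the ID of the request currently being handled; "-" outside a request.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copies the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


logger = logging.getLogger("recommendations")
logger.setLevel(logging.INFO)
handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(correlation_id)s %(message)s"))
logger.addHandler(handler)


def call_external_service(scenario: str, node: str) -> None:
    # Hypothetical placeholder for a node that calls an external service.
    logger.info("scenario=%s node=%s calling external service", scenario, node)


def handle_request(client_id: str) -> str:
    cid = str(uuid.uuid4())  # one unique ID per incoming request
    token = correlation_id.set(cid)
    try:
        logger.info("handling client %s", client_id)
        call_external_service("shipping", "get shipping")
        call_external_service("orders", "get recent orders")
    finally:
        correlation_id.reset(token)  # do not leak the ID into the next request
    return cid
```

Every log line written while a request is handled carries the same ID, so grepping for it reconstructs the request's path through scenarios and nodes. Since asyncio tasks copy the current context when they are created, scenarios launched concurrently from inside the request would inherit the same ID.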

These enhancements are made possible by extending Nussknacker's request-response API. By customising sources through extensions like RequestResponsePostSource, we gain flexibility in how requests are handled, from transforming and parsing them to generating custom APIs.

In our implementation, we've also added timeouts for scenarios. If an external service takes longer than expected to respond, the scenario returns whatever data it has collected within a set time frame. Meanwhile, scenarios that exceed the timeout continue to run in the background, and their results are logged for later review.

Using custom Nussknacker categories gives us more control over scenarios. For example, we can include technical nodes within scenarios to monitor flow without affecting end users. The generic nature of RequestResponseScenarioInterpreter allows us to choose what information to include in scenario responses—whether it's actionable data for users or technical insights for analysis.
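Separating user-facing output from technical output could be as simple as tagging each result, as in this Python sketch. The `ScenarioOutput` type and its `audience` tag are hypothetical and only illustrate the idea; they are not part of RequestResponseScenarioInterpreter.

```python
from dataclasses import dataclass
from typing import List, Literal, Tuple


@dataclass
class ScenarioOutput:
    # Hypothetical tag telling who an output is meant for.
    audience: Literal["user", "technical"]
    payload: dict


def split_outputs(outputs: List[ScenarioOutput]) -> Tuple[List[dict], List[dict]]:
    """Separate actionable data for the agent from technical monitoring data."""
    user = [o.payload for o in outputs if o.audience == "user"]
    technical = [o.payload for o in outputs if o.audience == "technical"]
    return user, technical
```

The user part would go into the HTTP response shown to the agent, while the technical part would be sent to logs or metrics, so monitoring nodes never change what end users see.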

Nussknacker works great out of the box, but it is more than just an application. If you are a system architect, programmer, or project manager, the value of open-source code is clear to you, especially code designed for robust systems, extensibility, or even experimentation, like using Nussknacker to develop StarCraft tactics to play against other players (yeah, we’ve done that).

Whether you're exploring Nussknacker for fun or integrating it into existing architecture, it offers solid performance and easy customization. Just like in the Dunder Mifflin example, you can tailor it to fit your needs.