Why native image?

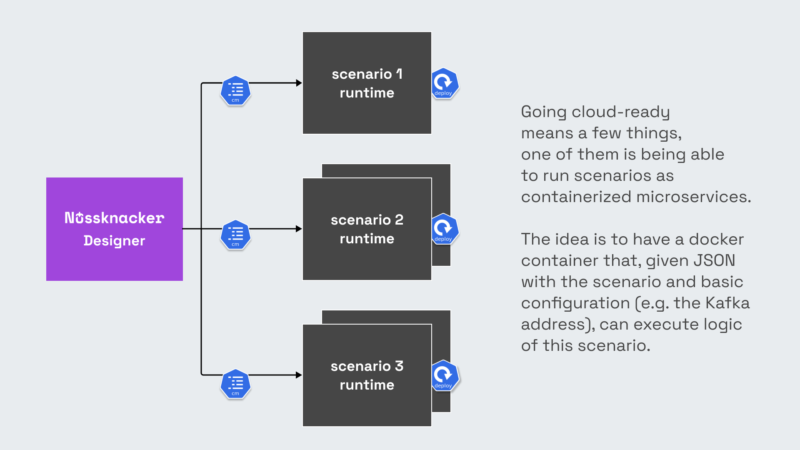

For some time we’ve been working on making Nussknacker more cloud-ready. This means a few things, one of which is being able to run scenarios as containerized microservices.

The idea is to have a Docker container that, given JSON with the scenario and basic configuration (e.g. the Kafka address), can execute the logic of this scenario.

Now, given that our largest deployment spans more than 100 running scenarios, the natural question is - how to make the scenario container as small as possible?

This applies to the sheer image size (which is > 400MB at the moment) and, even more importantly, to memory usage, startup time and so on.

Of course, the first answer is - let’s rewrite Nussknacker Runtime in Go, Rust or whatever systems language you prefer. This is indeed an interesting idea, I hope we’ll go back to it later 😉

However, it turns out we can improve things quite a lot while staying in our JVM comfort zone.

I decided to spend some time during our TouK initiatives (a hackathon and flash talk preparation) to figure out if GraalVM can be the answer.

GraalVM - basics

To begin, let’s look at what GraalVM is. According to the official site, it’s “a high-performance JDK distribution”. Sounds good, but what does this really mean? If you look under the hood, you’ll notice three major components:

- Brand new JIT compiler.

- A framework for implementing and embedding other languages (polyglot), named Truffle.

- Ability to generate native images.

While the first two are certainly exciting, we’ll leave them for the next time and we’ll focus on native images.

The idea is to be able to generate a single binary (instead of a bunch of jars), which can run without having to install JRE or JDK. Instead, the binary (similar to e.g. Go) will contain all the necessary JVM stuff, like the garbage collector, thread implementations, and so on.

On GraalVM this JVM is named Substrate VM. Of course, it’s a bit limited compared to the standard HotSpot JVM - e.g. in the “community” edition the only real garbage collector available is the Serial GC (G1 is reserved for the enterprise edition) - but for smaller services, it actually makes sense to use it.

AOT compilation

So, how does this magic dust actually work? The key concept is Ahead of Time (AOT) compilation, which replaces the JIT (Just in Time) compiler used in HotSpot. The GraalVM compiler analyses the code, starting from the application entrypoint (i.e. the main class). During this process, it finds all the code that can be invoked, and only that code is compiled into the binary - resulting in a smaller image. This is somewhat similar to tree shaking in modern JS applications.
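As a toy illustration of this reachability idea (not GraalVM’s actual analysis, which works on bytecode and is far more sophisticated), the sketch below walks a hand-written call graph from an entrypoint and keeps only what it can reach. All method names in the graph are made up for the example.

```scala
import scala.annotation.tailrec

object ReachabilitySketch {
  // Hypothetical call graph: method name -> methods it may invoke.
  val callGraph: Map[String, Set[String]] = Map(
    "Main.main"         -> Set("Runner.run", "Config.load"),
    "Runner.run"        -> Set("Kafka.poll"),
    "Config.load"       -> Set.empty[String],
    "Kafka.poll"        -> Set.empty[String],
    "Legacy.unusedPath" -> Set("Kafka.poll") // never called from the entrypoint
  )

  // Collects every method transitively reachable from the given entrypoint.
  def reachableFrom(entry: String): Set[String] = {
    @tailrec
    def loop(toVisit: List[String], seen: Set[String]): Set[String] = toVisit match {
      case Nil                  => seen
      case m :: rest if seen(m) => loop(rest, seen)
      case m :: rest =>
        loop(callGraph.getOrElse(m, Set.empty[String]).toList ++ rest, seen + m)
    }
    loop(List(entry), Set.empty[String])
  }

  def main(args: Array[String]): Unit = {
    val kept = reachableFrom("Main.main")
    println(s"compiled into the image: ${kept.toList.sorted.mkString(", ")}")
    println(s"left out: ${(callGraph.keySet -- kept).mkString(", ")}")
  }
}
```

In this model `Legacy.unusedPath` never makes it into the result, which is the effect that keeps the native binary small.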

Of course, AOT compilation has some significant limitations, which result from its much more static nature. The most significant ones, from the Nussknacker POV, are:

- Cannot add jars at runtime - this means we cannot add plugins without regenerating the image

- Limited use of reflection. You cannot just call `Class.forName` and expect it to work. All the classes that you’ll interact with via Reflection API have to be declared during the compilation. This makes configuration a bit cumbersome - we’ll get to that in a minute.

- Cannot generate bytecode at runtime - well, this one is rather obvious, as a native image doesn’t execute bytecode at all!
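To see why reflection is a problem for static analysis, consider this minimal sketch (the object name and the default class name are purely illustrative). On a regular JVM it works for any class on the classpath. In a native image, a class whose name is only known at run time generally has to be listed in the reflection configuration, otherwise the lookup fails.

```scala
object ReflectionSketch {
  // Looks a class up by name and calls its public no-argument constructor.
  def instantiate(className: String): Any =
    Class.forName(className).getDeclaredConstructor().newInstance()

  def main(args: Array[String]): Unit = {
    // The class name comes from the command line, so the AOT compiler
    // cannot know at build time which class has to be kept in the image.
    val className = args.headOption.getOrElse("java.util.ArrayList")
    val instance  = instantiate(className)
    println(instance.getClass.getName)
  }
}
```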

How to use it in practice?

Ok, so we know how native image generation works in theory. Next question - what changes do we need to make to get Nussknacker working? After all, this is no pet project…

The first thing is configuring the build tool. As usual in the Scala ecosystem, there is more than one plugin to do it… We chose sbt-native-packager, since we already use it for Docker images. The configuration itself is quite simple:

    enablePlugins(SbtNativePackager, JavaServerAppPackaging, GraalVMNativeImagePlugin).
      settings(
        containerBuildImage := GraalVMNativeImagePlugin.generateContainerBuildImage("ghcr.io/graalvm/graalvm-ce:java11-21.2.0").value,
        graalVMNativeImageOptions := List(
          "--report-unsupported-elements-at-runtime",
          "--no-fallback",
          "--enable-url-protocols=http",
          "--initialize-at-build-time=scala",
          "--initialize-at-run-time=org.slf4j.LoggerFactory,io.netty",
          "-H:ResourceConfigurationFiles=/opt/graalvm/stage/resources/resource-config.json",
          "-H:ReflectionConfigurationFiles=/opt/graalvm/stage/resources/reflect-config.json",
          "-H:+StaticExecutableWithDynamicLibC"
        ),
      )

Let’s dig into the details a bit. It turns out that the best way to generate a native image is to do it in a Docker container, which is what the `containerBuildImage` setting configures. The reason is quite simple - since we’re generating machine code, it’s easier to create a reproducible build this way, without relying on the developer’s machine.

Next, we have to pass some more or less standard options:

    --report-unsupported-elements-at-runtime
    --no-fallback
    --enable-url-protocols=http
    -H:+StaticExecutableWithDynamicLibC

Some of them are a bit surprising - why aren’t HTTP support or exit handlers (which react to signals correctly) enabled by default? Fortunately, the documentation is quite comprehensive.

Only the last configuration option demands some comment. A native image doesn’t need a JVM installed, but system libraries are still needed. It turns out that it’s quite easy to generate a “mostly static” image, containing everything except libc. Embedding libc inside the image is also possible, but not so easy.

Now, the trickier part, the initialization configuration:

    --initialize-at-build-time=scala
    --initialize-at-run-time=org.slf4j.LoggerFactory,io.netty

GraalVM tries to initialize classes at build time, which is not always possible or desirable - especially if the initialization involves reading config files etc., which is typically the case for logging frameworks.
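A small sketch of why the timing matters, assuming a hypothetical `KAFKA_ADDRESS` environment variable (neither the variable nor the `Settings` object comes from Nussknacker). A Scala `object` is initialized once. If that happened while the image was being built, the value below would be read from the build machine’s environment and baked into the binary, rather than read when the container starts.

```scala
object InitSketch {
  object Settings {
    // Evaluated once, when Settings is first initialized.
    // KAFKA_ADDRESS is a hypothetical variable used only for this example.
    val kafkaAddress: String = sys.env.getOrElse("KAFKA_ADDRESS", "localhost:9092")
  }

  def main(args: Array[String]): Unit =
    println(s"Kafka address: ${Settings.kafkaAddress}")
}
```

Classes like this are the ones that belong on the `--initialize-at-run-time` list.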

The most difficult part is the reflection configuration though:

    -H:ResourceConfigurationFiles=/opt/graalvm/stage/resources/resource-config.json
    -H:ReflectionConfigurationFiles=/opt/graalvm/stage/resources/reflect-config.json

The content of reflect-config.json looks like this:

    [
      {
        "allPublicFields":true,
        "allPublicMethods":true
      }
    ]

The file should list every class (identified by its fully qualified `name`), together with the fields and methods that will be accessed via reflection. Of course, it would be possible to create this configuration via a painful trial-and-error approach, but for large code bases - like Nussknacker - this approach is not viable.

Fortunately, the GraalVM creators prepared a small Java agent, which can help a lot. The idea is to run the application on a “standard” JVM with the agent enabled, which will collect all invocations of the reflection API and generate appropriate configuration entries.
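For reference, here is a hedged sketch that renders a complete entry of this kind, limited to the `name`, `allPublicFields` and `allPublicMethods` keys. The class name `com.example.SampleComponent` is a placeholder, not an actual Nussknacker class, and the helper itself is only an illustration, not part of any tooling.

```scala
object ReflectConfigSketch {
  // One reflect-config.json entry, restricted to the three keys discussed here.
  final case class Entry(name: String, allPublicFields: Boolean, allPublicMethods: Boolean) {
    def toJson: String =
      s"""{"name":"$name","allPublicFields":$allPublicFields,"allPublicMethods":$allPublicMethods}"""
  }

  // Renders the entries as a JSON array, one entry per line.
  def render(entries: Seq[Entry]): String =
    entries.map(e => "  " + e.toJson).mkString("[\n", ",\n", "\n]")

  def main(args: Array[String]): Unit = {
    // Placeholder class name, used only for illustration.
    val entry = Entry("com.example.SampleComponent", allPublicFields = true, allPublicMethods = true)
    println(render(Seq(entry)))
  }
}
```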

    -agentlib:native-image-agent=config-output-dir=/tmp/graal

Of course, it assumes that all code paths will be executed during the “test run” - which can be a bit problematic for more complex applications. For our experiment it wasn’t difficult - we just ran a sample scenario with some data.

After configuring the plugin, we can generate the image:

    sbt liteGraalVM/graalvm-native-image:packageBin

Then we build the Docker image with a simple Dockerfile (we chose a distroless base image, as it contains glibc, while the Alpine image uses musl as its libc implementation, which doesn’t work out of the box):

    ADD ./target/nussknacker-lite-kafka-graalvm /nussknacker-lite-kafka-graalvm
    ENTRYPOINT ["/nussknacker-lite-kafka-graalvm"]

Problems along the way

Of course, we encountered a few bumps along the way… I collected them in a separate section, as I hope they will disappear sooner (hopefully) or later:

- We couldn’t make Scala TypeTags work. They are not so important in NU, so for the PoC we decided to just remove them and replace them with simpler code, especially as they seem to be unavailable in Scala 3.

- https://github.com/oracle/graal/issues/3977 - the latest GraalVM (21.3.0) caused strange errors, so we downgraded to 21.2.0, which works just fine

- We tried static image generation, but it was a bit more difficult than expected:

  - https://www.graalvm.org/reference-manual/native-image/StaticImages/ - one has to compile musl-libc (which is the libc implementation included in a static image)

  - https://github.com/oracle/graal/issues/4076 - seems like the latest version of musl is not always the best choice…

Final results

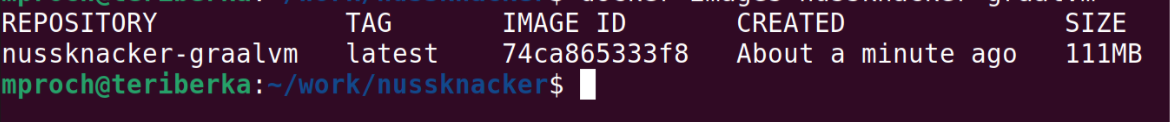

Now, let’s see what we achieved. First, in terms of image size:

The difference with the standard openjdk-slim build is visible!

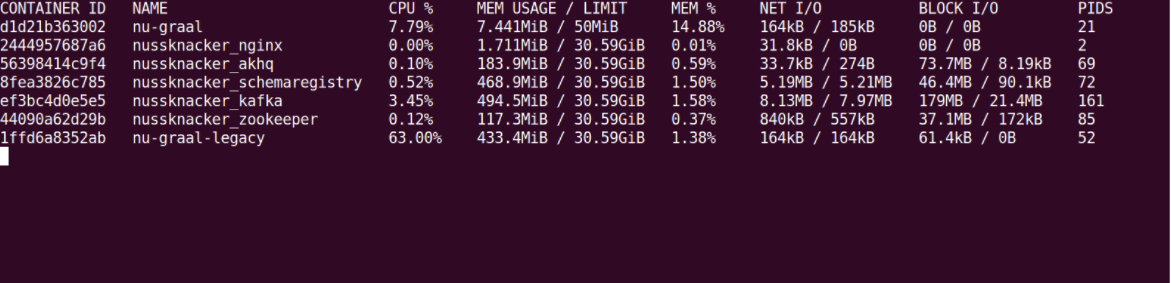

What about runtime?

Of course, the comparison above is a bit biased, as we didn’t do anything to tame memory usage on the regular JDK, but still - I find the <10MB memory usage of the GraalVM image quite impressive. Later, we tried to reduce the memory footprint on the regular JDK, but the best we could achieve was around 256MB - mainly because of high Metaspace usage.

To wrap up: I think that GraalVM native image is quite impressive. We managed to run a quite complex application, which was not created with GraalVM in mind, without too many changes in the code. The resulting image and memory usage are really small, which makes running each Nussknacker scenario in a separate container viable.

However, the toolchain is not there yet when it comes to stability and ease of use. Some of the problems can be fixed with better documentation or default settings. One thing that looks a bit hacky is the idea to run the application on “normal” JDK to generate reflection config and only afterwards generate the native image.

If you want to give Nussknacker on GraalVM a ride, or look at the details, the code is here:

https://github.com/mproch/nussknacker/tree/graalvm,

with some testing scripts: https://github.com/mproch/nussknacker/tree/graalvm/engine/lite/kafka/graalvm/run.

Be aware it’s only a POC at the moment, though 😀