As mentioned in our previous post, while working on Nussknacker we’ve come to realize that many real-life use cases do not require complex stateful stream processing. Fortunately, Nussknacker was designed from the start to support multiple execution engines. In this post, we’ll take a quick look at what is possible in Nu 1.3.

Let’s look at a Nussknacker deployment. It consists of two main components:

- Designer - a GUI for the creation of scenarios

- Execution engine - responsible for interpreting and executing scenarios

The Designer application stays the same, but it can integrate with various execution engines through the pluggable DeploymentManager API. This approach allows us to provide a solution tailored to a specific use case while keeping the scenario authoring experience consistent across all execution engines.
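To make the idea of a pluggable engine layer concrete, here is a minimal sketch in Python, purely for illustration. The real Nussknacker DeploymentManager API is not reproduced here; the `Scenario`, `DeploymentManager`, `FlinkDeploymentManager`, `LiteK8sDeploymentManager` and `designer_deploy` names are hypothetical, and the "deployments" are only recorded in memory. What it shows is the design choice: the Designer depends on one abstraction, and each engine plugs in its own implementation.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Hypothetical, simplified scenario definition produced by the Designer."""
    name: str
    definition: dict


class DeploymentManager(ABC):
    """Hypothetical plugin interface: the Designer talks only to this abstraction."""

    @abstractmethod
    def deploy(self, scenario: Scenario) -> str:
        """Deploy the scenario and return an engine-specific deployment id."""

    @abstractmethod
    def cancel(self, scenario_name: str) -> None:
        """Stop a running scenario."""


class FlinkDeploymentManager(DeploymentManager):
    def __init__(self) -> None:
        self.jobs: dict[str, str] = {}

    def deploy(self, scenario: Scenario) -> str:
        # A real engine would submit a Flink job here; this sketch only records it.
        job_id = f"flink-job-{scenario.name}"
        self.jobs[scenario.name] = job_id
        return job_id

    def cancel(self, scenario_name: str) -> None:
        self.jobs.pop(scenario_name, None)


class LiteK8sDeploymentManager(DeploymentManager):
    def __init__(self) -> None:
        self.deployments: dict[str, str] = {}

    def deploy(self, scenario: Scenario) -> str:
        # A real engine would create a Kubernetes Deployment here; this sketch only records it.
        name = f"scenario-{scenario.name}"
        self.deployments[scenario.name] = name
        return name

    def cancel(self, scenario_name: str) -> None:
        self.deployments.pop(scenario_name, None)


def designer_deploy(manager: DeploymentManager, scenario: Scenario) -> str:
    # The Designer's code path is identical, whichever engine sits behind `manager`.
    return manager.deploy(scenario)


if __name__ == "__main__":
    scenario = Scenario("fraud-detection", {"source": "kafka"})
    print(designer_deploy(FlinkDeploymentManager(), scenario))    # flink-job-fraud-detection
    print(designer_deploy(LiteK8sDeploymentManager(), scenario))  # scenario-fraud-detection
```

Adding another engine in this sketch means adding another subclass; nothing on the Designer side changes.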

Let’s see which execution engines Nussknacker provides out of the box (for some of our customers we have also built custom implementations to suit their needs).

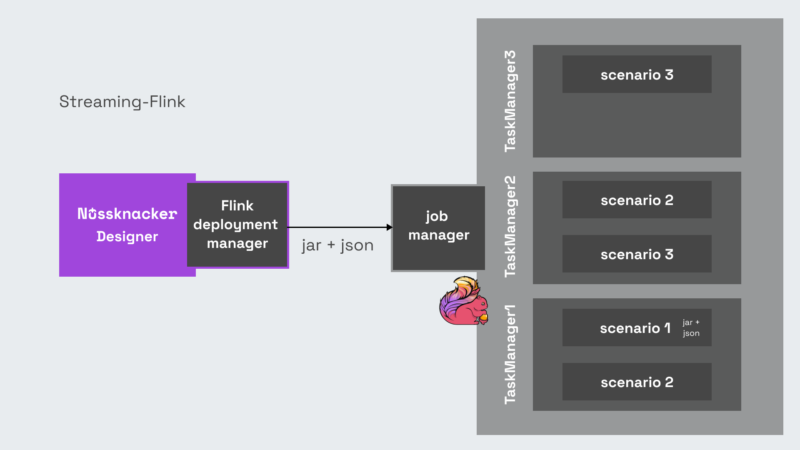

First comes the “streaming” deployment manager. This is the “classic” one, which uses Flink as its execution engine to provide scalability, large-scale stateful processing, exactly-once processing guarantees, and more.

In this mode, each deployed scenario gives rise to a Flink job. Read more about Flink Architecture.

Of course, all the benefits of using Flink come at a cost: a steep learning curve, the need to operate a Flink cluster (a non-trivial piece of software in its own right, with its own cluster management, scheduler, etc.), and so on.

Now, chances are that you are already using a container scheduler, most likely Kubernetes. Wouldn’t it be great if you could just use it to deploy your business logic? Say you want to handle events from Kafka, enrich them with data from external systems, and possibly score some ML models.

Here comes the Streaming Lite execution engine for Nussknacker.

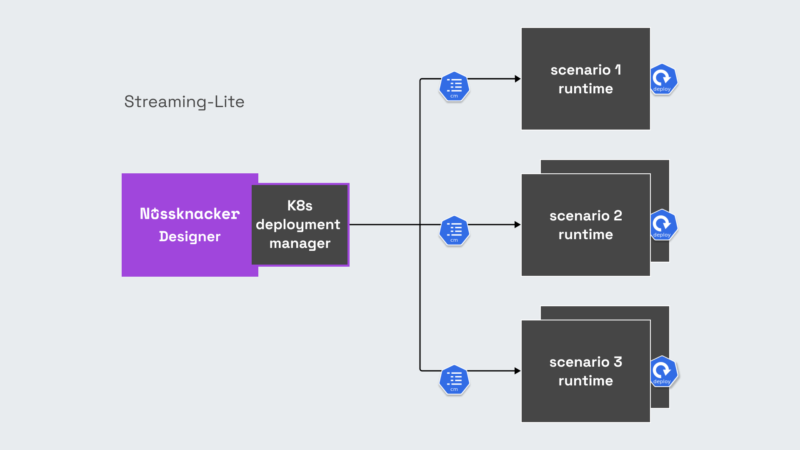

Deploying a Streaming-Lite scenario creates a K8s Deployment, so each scenario runs as a separate service. The pods are based on the Nu Runtime image, which contains code that interprets the scenario definition provided by the Designer and executes it as a microservice.
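As a rough illustration of what "one scenario, one K8s Deployment" means, the sketch below builds the Kubernetes objects as plain Python dicts. The Deployment and ConfigMap fields follow the standard Kubernetes schema, but everything specific to Nussknacker is an assumption: the `scenario-<name>` naming scheme, the image name `example.registry/nu-runtime:1.3`, and passing the scenario definition to the pod through a mounted ConfigMap are all hypothetical, not the actual Streaming-Lite manifests.

```python
import json


def scenario_manifests(scenario_name: str, scenario_json: dict,
                       image: str, replicas: int = 1) -> list[dict]:
    """Build a ConfigMap + Deployment pair for one scenario (hypothetical layout)."""
    name = f"scenario-{scenario_name}"  # hypothetical naming scheme
    labels = {"app": name}

    # Hypothetical: the scenario definition is shipped to the pod as a ConfigMap file.
    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {"scenario.json": json.dumps(scenario_json)},
    }

    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                # K8s handles scaling of the scenario
            "selector": {"matchLabels": labels}, # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "runtime",
                        "image": image,  # the runtime image that interprets the scenario
                        "volumeMounts": [{"name": "scenario", "mountPath": "/data"}],
                    }],
                    "volumes": [{"name": "scenario", "configMap": {"name": name}}],
                },
            },
        },
    }
    return [config_map, deployment]


if __name__ == "__main__":
    for obj in scenario_manifests("fraud-detection", {"source": "kafka"},
                                  "example.registry/nu-runtime:1.3", replicas=2):
        print(json.dumps(obj, indent=2))
```

Because the scenario ends up as an ordinary Deployment, standard tooling (`kubectl`, autoscalers, resource quotas) applies to it without anything Nussknacker-specific.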

This approach lets us take advantage of K8s features such as scaling and resource management. The exact details can be adjusted in the deployment manager configuration. We also rely on key Kafka features: offset management, consumer balancing, transactions for exactly-once processing, and so on.
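Consumer balancing is what makes K8s scaling work here: when pods of the same scenario join one Kafka consumer group, the topic's partitions are spread among them. The sketch below is a simplified, single-topic range-style assignment, similar in spirit to Kafka's `RangeAssignor` but not Kafka's code; `range_assign` and the pod names are hypothetical. It also shows a practical consequence: replicas beyond the partition count receive no partitions and sit idle.

```python
def range_assign(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Simplified range-style assignment of one topic's partitions to group members."""
    if not consumers:
        raise ValueError("at least one consumer is required")
    parts = sorted(partitions)
    members = sorted(consumers)
    # Every member gets `per_member` partitions; the first `extra` members get one more.
    per_member, extra = divmod(len(parts), len(members))
    assignment: dict[str, list[int]] = {}
    start = 0
    for i, member in enumerate(members):
        count = per_member + (1 if i < extra else 0)
        assignment[member] = parts[start:start + count]
        start += count
    return assignment


if __name__ == "__main__":
    # 6 partitions, 3 pods: each pod gets two partitions.
    print(range_assign(list(range(6)), ["pod-0", "pod-1", "pod-2"]))
    # {'pod-0': [0, 1], 'pod-1': [2, 3], 'pod-2': [4, 5]}

    # 6 partitions, 8 pods: two pods get nothing to consume.
    print(range_assign(list(range(6)), [f"pod-{i}" for i in range(8)]))
```

In real deployments Kafka performs this rebalancing automatically whenever pods are added or removed, so the useful upper bound on replicas for a scenario is roughly the number of partitions of its input topic.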

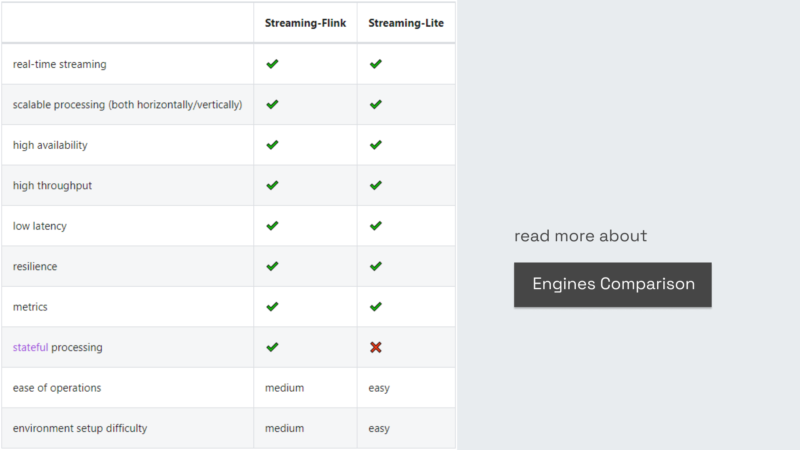

The drawback of Streaming-Lite is the lack of stateful components. However, the benefits are significant, the main one being a simpler architecture built on components based on current standards. This makes operations much simpler, which is why we’re planning to provide Streaming-Lite as the engine of NU Cloud (it’s coming very soon; if you want to know more, write to info@nussknacker.io).

If you’re still not sure which engine to choose, have a look at the comparison in the docs.

Now, you may think: I can choose between engines, the Flink-based one or the Streaming-Lite one, but either way I still need to use a streaming approach. What if I don’t need Kafka, and just want to invoke a simple REST service implementing my decision logic, with additional enrichments, possibly ML model scoring, etc.?

Nussknacker can help in these situations too. We’re working with a few customers on an engine that handles such cases; if you’re interested, we’d love to hear from you at info@nussknacker.io.