Integrating machine learning models into business applications

Developing and integrating machine learning models into business applications can often feel like navigating a maze. The process is fraught with challenges – from training a model using a vast range of ML technologies to successfully deploying the model in a production environment and integrating the model into a business application. On top of that, you need to adapt to ever-changing business needs, which requires you to refine the models, deploy new versions, and integrate these new versions into the application.

In this blog post, we walk through the complete process: training a model, invoking it from business logic, and later refining it. We believe that ML model inference can be much simpler.

How a data scientist develops an ML model

The development of a machine learning model is typically carried out in an interactive environment, such as a Jupyter Notebook. This environment provides an instant feedback loop: you can visualize results from small pieces of Python code, tinker with the dataset, and continuously refine the model. Furthermore, Jupyter Notebooks are usually served remotely, so you can pick up the work the next day where you left off. This also makes it easier to execute time-consuming model training on a remote server.

Machine learning experiment tracking

Experiment tracking is an essential part of machine learning. As data scientists refine their models, they generate a multitude of experiments, each with its own set of parameters, data transformations and metrics, such as model accuracy. Keeping track of these experiments manually can be cumbersome. This is where experiment tracking tools like MLflow can be invaluable.
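To make this concrete, here is a minimal sketch of how runs could be recorded with the MLflow tracking API. `mlflow.set_experiment`, `mlflow.start_run`, `mlflow.log_params` and `mlflow.log_metric` are real MLflow calls; the helper names, the experiment name and the `train_and_score` callback are our assumptions for illustration and are not taken from the notebook.

```python
from itertools import product
from typing import Callable, Dict, Iterator


def parameter_grid(grid: Dict[str, list]) -> Iterator[dict]:
    """Yield every combination of the given hyperparameter values."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


def track_experiment(
    experiment_name: str,
    grid: Dict[str, list],
    train_and_score: Callable[[dict], float],
) -> None:
    """Record one MLflow run per parameter combination.

    `train_and_score` is a hypothetical callback that trains a model with
    the given parameters and returns its accuracy.
    """
    # Imported here so that parameter_grid can be used without MLflow installed.
    import mlflow

    mlflow.set_experiment(experiment_name)
    for params in parameter_grid(grid):
        with mlflow.start_run():
            mlflow.log_params(params)
            mlflow.log_metric("accuracy", train_and_score(params))
```

Each parameter combination becomes a separate run, so the runs can later be compared side by side in the MLflow UI.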

However, MLflow offers more than just experiment tracking; it also provides a repository for storing ML models. To draw an analogy, think of an ML model registry as a schema registry. While the schema registry maintains data schemas (e.g., Avro or JSON), the model registry maintains the versions of machine learning models and their metadata, such as definitions of input and output parameters.

Preparing an initial ML model

We will use a credit card fraud detection model as an example. Our objective is to block fraudulent credit card transactions. Imagine the transactions are sent in real time to a Kafka topic. While we could author a "classical" Nussknacker scenario for that task, identifying illegitimate transactions with decision rules is not a straightforward process. Therefore, we need the expertise of a data scientist to train a machine learning model for us.

To demonstrate how to do this, let's examine the Jupyter notebook. Click 👉 here to open it.

MLflow demo

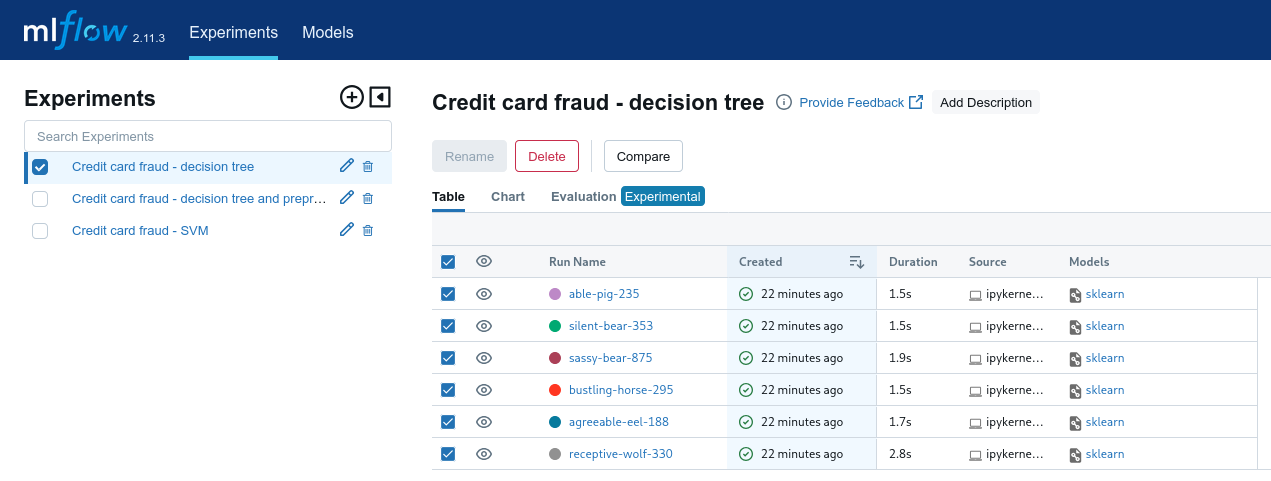

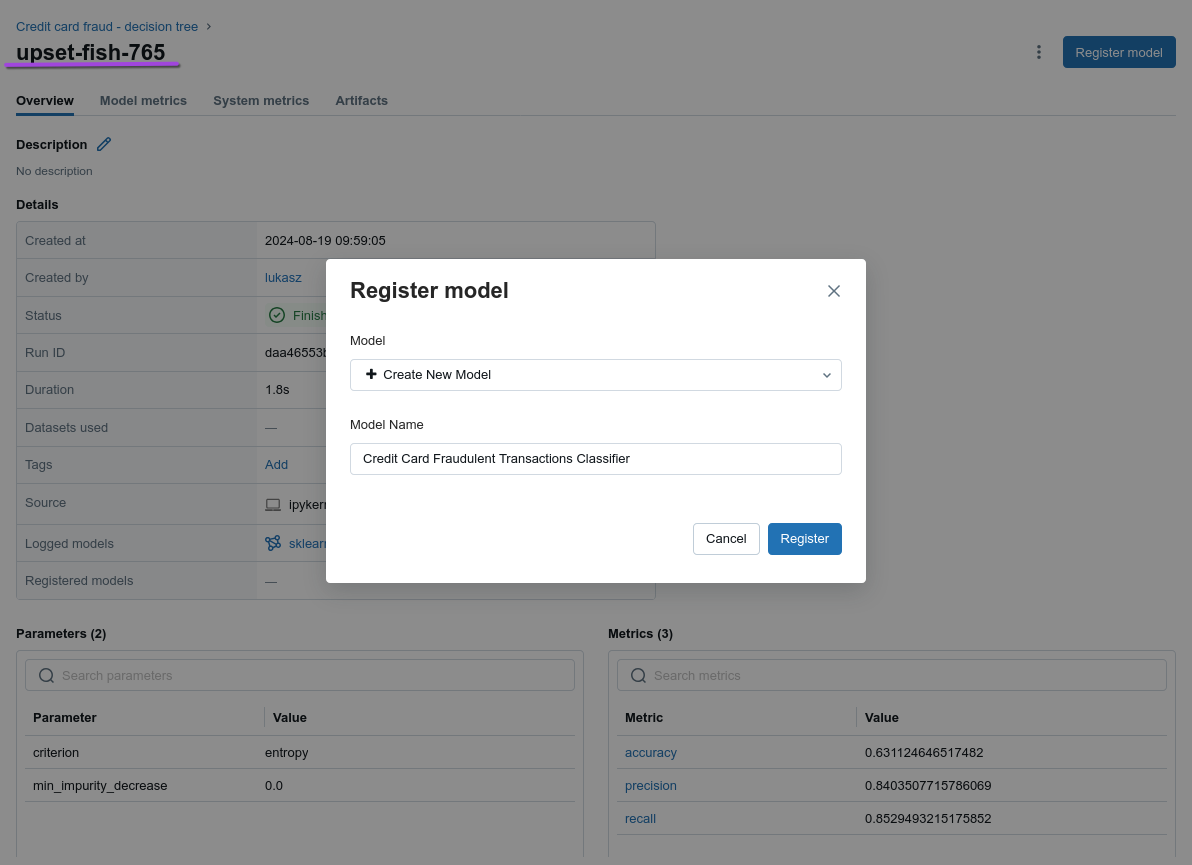

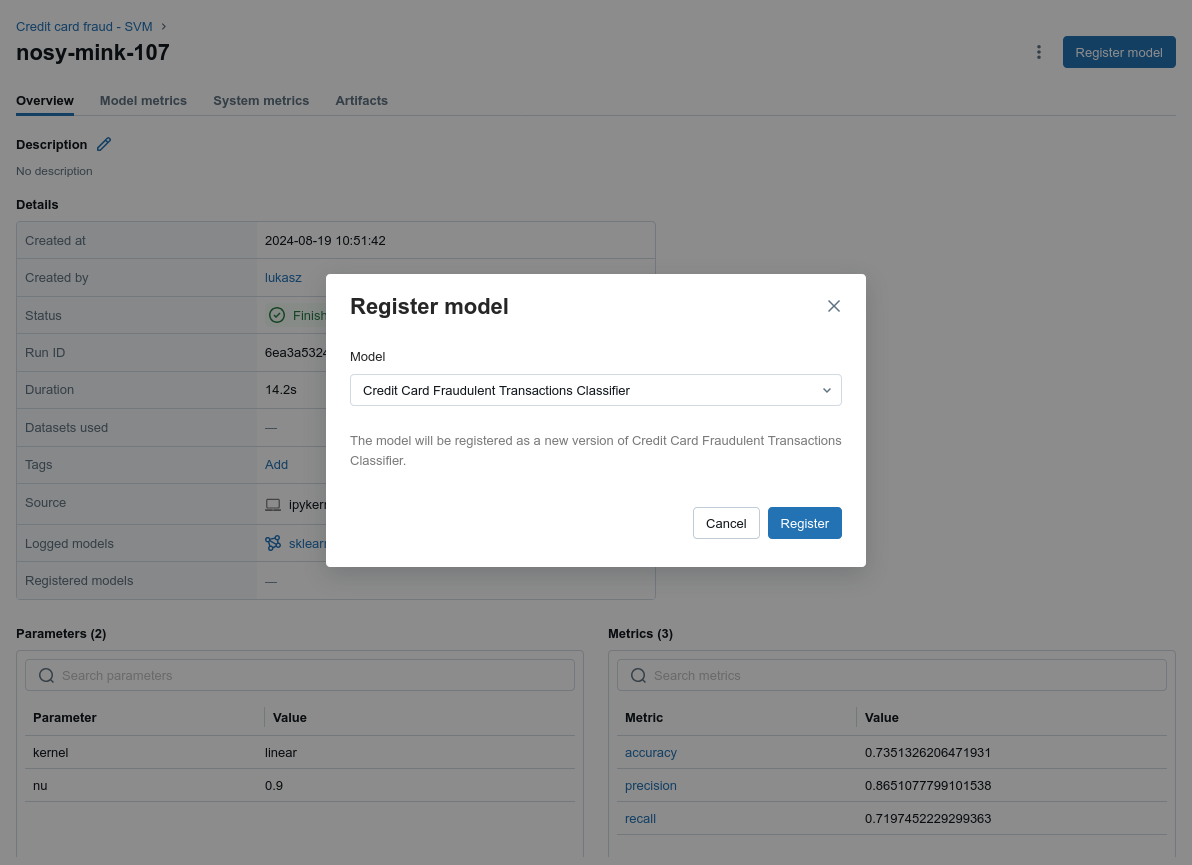

Once the experiment is recorded in MLflow, it's time to register a new model for the best set of parameters. In MLflow, each training attempt with a given set of parameters is called a run. Below are some visualizations and steps that show how MLflow facilitates model comparison and registration.

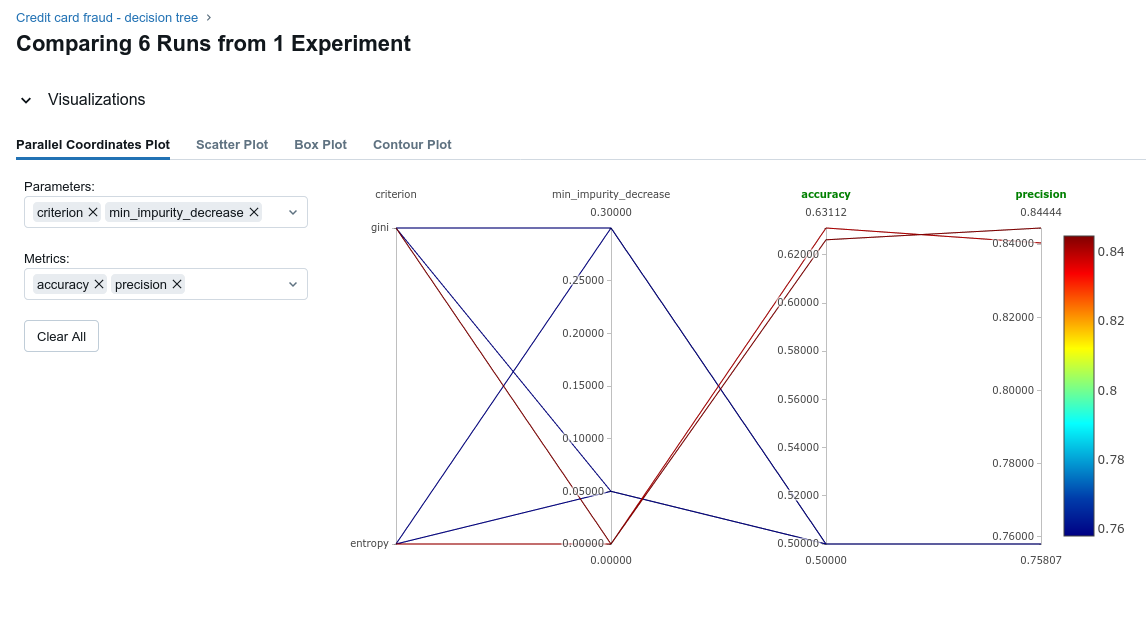

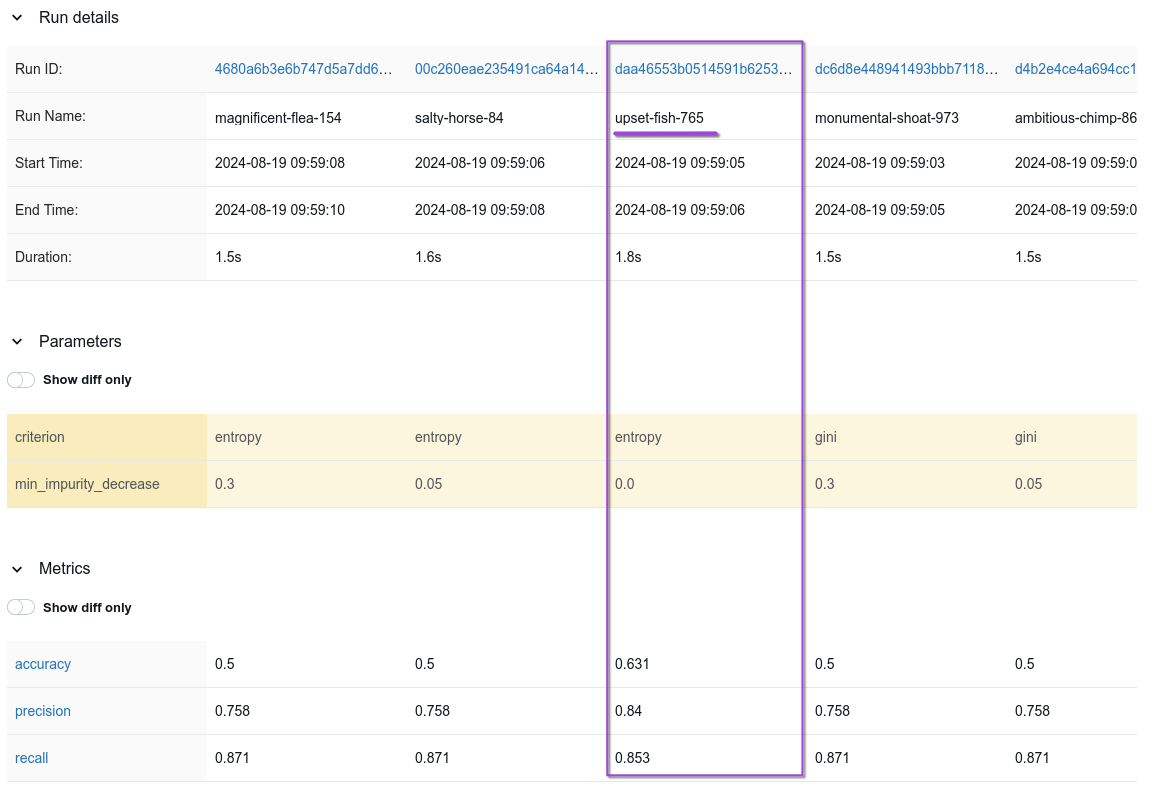

First of all, you need to go to Experiments and find your newly created experiment. We also select all runs within the experiment to be able to compare the performance metrics of each trained model. MLflow allows parameters to be plotted against metrics to help select the best run. As you can see, we have selected the run with the highest accuracy for the parameters criterion=entropy and min_impurity_decrease=0.0 and registered it as an MLflow model.

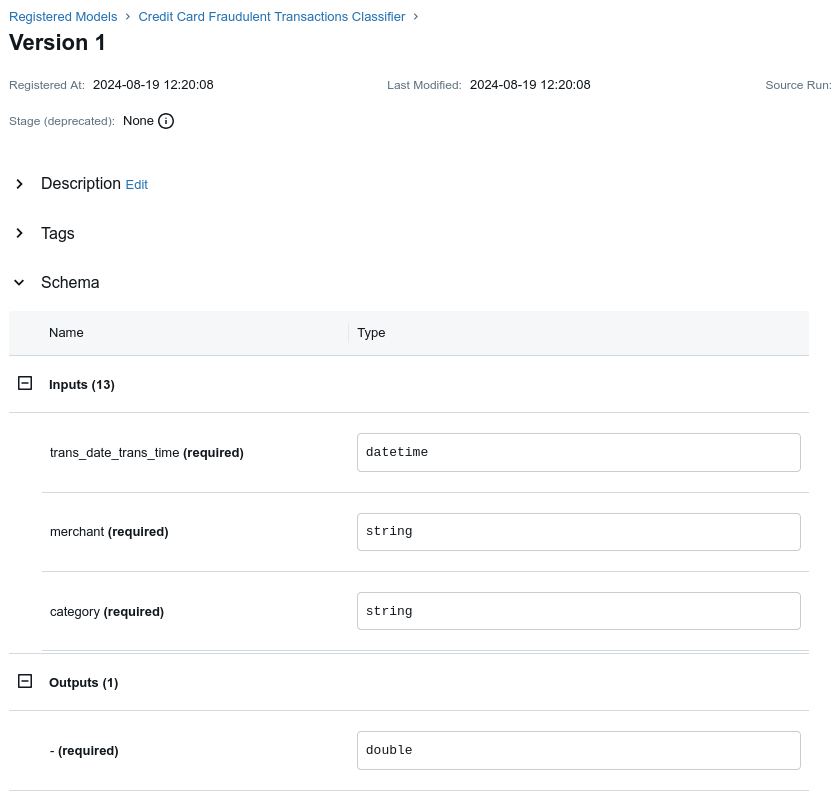

Registering a model is really easy. When the new model is registered, we can click on the first version and see its metadata, such as input and output vectors.

MLflow enricher in a fraud detection scenario

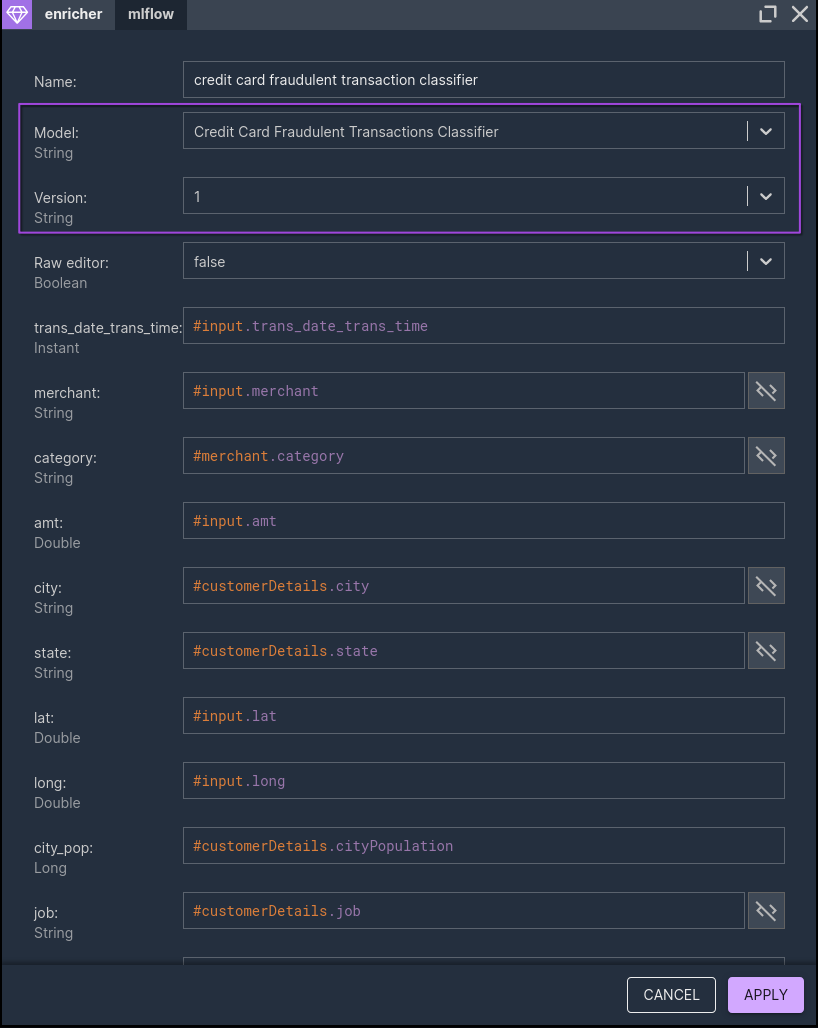

Once the ML model is registered in the MLflow model registry, we proceed with the creation of the Nussknacker scenario. Nussknacker's MLflow enricher discovers available models, their versions, and their input and output parameters by fetching metadata from the MLflow model registry.

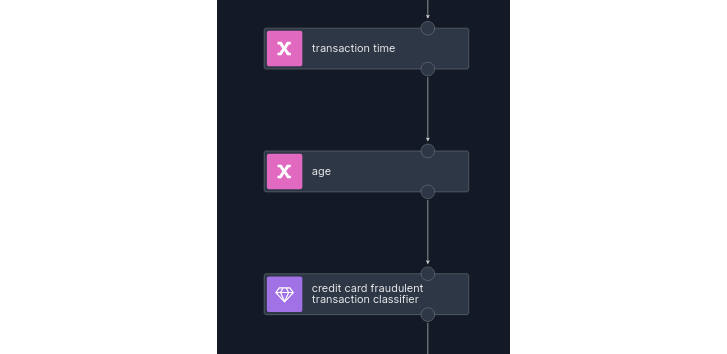

The final Nussknacker scenario looks like this:

- Start with the transactions source, which is a Kafka topic containing credit card transactions.

- Since the transaction event does not contain all the data required by the ML model, we need to enrich the stream with customer details.

- Now enrich the stream with merchant data.

- Using the MLflow enricher, invoke the ML model to determine if the transaction is fraudulent. See the image below for the enricher details.

- Log the result of the model inference along with the transaction information for further analysis.

- Filter the stream down to the transactions classified as fraudulent and send them to another Kafka topic, where a downstream system can pick them up and block them.
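The data flow of the steps above can be mirrored in a few lines of Python. This is only an illustration of the logic, not how Nussknacker executes a scenario: the lookup tables, field names, threshold and the `score_transaction` stub are hypothetical stand-ins for the enrichers and the registered model.

```python
import logging
from typing import Callable, Iterable, Iterator

logger = logging.getLogger("fraud-scenario")

# Hypothetical lookup tables standing in for the customer and merchant enrichers.
CUSTOMERS = {"c1": {"dob": "1980-05-17"}}
MERCHANTS = {"m1": {"category": "grocery"}}


def score_transaction(features: dict) -> float:
    """Stub for the model call: treats large amounts as risky."""
    return 0.9 if features["amount"] > 1000 else 0.1


def fraudulent_transactions(
    transactions: Iterable[dict],
    model: Callable[[dict], float] = score_transaction,
    threshold: float = 0.5,
) -> Iterator[dict]:
    """Enrich each transaction, score it, log the result, keep only fraud."""
    for tx in transactions:
        # Steps 2 and 3: enrich with customer and merchant details.
        enriched = {**tx, **CUSTOMERS[tx["customer_id"]], **MERCHANTS[tx["merchant_id"]]}
        # Step 4: invoke the model.
        risk = model(enriched)
        # Step 5: log the inference result for further analysis.
        logger.info("transaction=%s risk=%.2f", tx["id"], risk)
        # Step 6: pass on only the transactions classified as fraudulent.
        if risk >= threshold:
            yield {**tx, "risk": risk}
```

In the real scenario, the source and sink are Kafka topics and the model call goes through the MLflow enricher; the generator here only shows the order of the steps.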

MLflow model inference simplified with Nussknacker ML Runtime

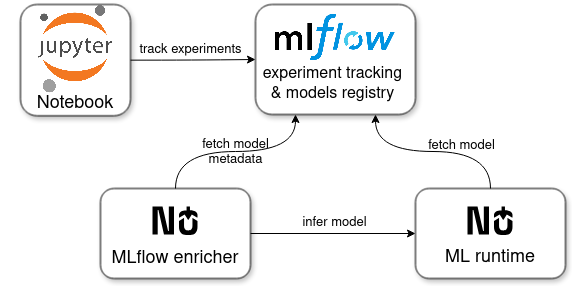

You might have noticed that we haven't explicitly mentioned how MLflow models are invoked, so let's go into that now. With Nussknacker's MLflow enricher, you simply select the desired model and its version, populate all input parameters, and the scenario is ready to be deployed. This streamlined process is made possible by the Nussknacker ML Runtime: the MLflow enricher not only discovers models through the MLflow model registry but can also run inference on any model available there. Please refer to the diagram below for an overview of the interactions between the components.

This approach significantly simplifies the integration of business applications with machine learning models, even when they are built using entirely different technologies, such as Java and Python. These technologies don't naturally work together, so integrating them typically involves exposing the model as a REST service and having developers integrate it into the business application. Moreover, every time an ML engineer deploys a new version of a model, a developer must reintegrate it into the application. With Nussknacker, a domain expert can easily update the decision logic with a new version of the model.

In our case, preprocessing was necessary to enrich the original credit card transaction. This can easily be done using enrichers, as demonstrated in the example scenario. Additionally, you might also use Nussknacker to compute real-time features if they are required by a model. For example, you can aggregate transactions in a time window for a customer and a merchant, then run the model with a sum of transactions.
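As a rough sketch of such a real-time feature, the class below keeps a running sum of transaction amounts per customer and merchant over a sliding time window. It is a plain Python illustration, not Nussknacker's aggregation component; the class name and the assumption that events arrive in timestamp order are ours.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple

Key = Tuple[str, str]  # (customer_id, merchant_id)


class SlidingWindowSum:
    """Sum of amounts per (customer, merchant) over the last N seconds.

    Assumes events for a given key arrive in non-decreasing timestamp order.
    """

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._events: Dict[Key, Deque[Tuple[float, float]]] = defaultdict(deque)

    def add(self, customer_id: str, merchant_id: str,
            timestamp: float, amount: float) -> float:
        """Register a transaction and return the current windowed sum."""
        events = self._events[(customer_id, merchant_id)]
        events.append((timestamp, amount))
        # Drop events that are no longer inside (timestamp - window, timestamp].
        while events[0][0] <= timestamp - self.window_seconds:
            events.popleft()
        return sum(value for _, value in events)
```

The value returned by `add` could then be passed to the model as an additional input alongside the enriched transaction.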

ML models exported to the ONNX or PMML format have one great advantage: native runtimes. This means that they can be executed within the same process as the Nussknacker scenario, which typically results in lower latency and lower resource requirements. However, we found that these formats have some limitations. First, not all models and not all popular ML libraries are covered. Second, the export process is not straightforward; you have to tinker with various parameters when trying to export a model.

Improving the model

As with any machine learning project, the initial model is rarely the final product. After some time, we realized that our scenario fails to flag some fraudulent transactions. On the other hand, we accept that some legitimate transactions will be flagged as fraudulent. Let's refine the model and dig into the Jupyter notebook. Click 👉 here to open it.

Once the new experiment has been recorded in MLflow, a new version of the model can be registered. After that, we can see that there are two versions of the model in the registry.

Making changes to the Nussknacker scenario

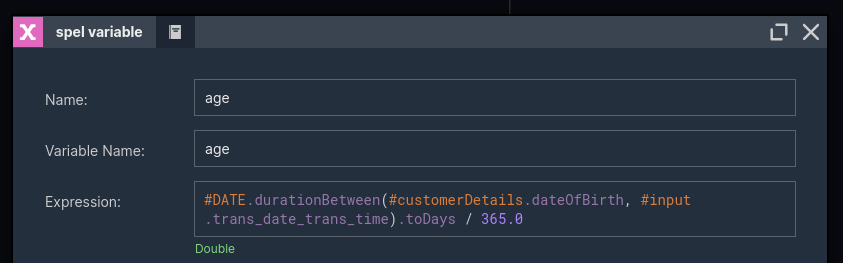

Having registered the new version of the model, we can easily select it in the enricher. However, the new version has a different input vector. Some variables were removed (trans_date_trans_time, dob) and some new ones appeared (age, trans_time), so we need to adjust the enricher parameters to the new model input.

Calculating age is easy thanks to Nussknacker's built-in helpers. See below:

Calculating transaction time is simple. Just extract the hour and minutes from the timestamp using SpEL. After the modification, the scenario has two new nodes which compute the newly added model's input parameters.
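For readers who think in Python, the two derived inputs correspond roughly to the functions below. This is not the SpEL used in the scenario, and the exact encoding the model expects for trans_time is not stated here, so representing it as minutes since midnight is purely our assumption.

```python
from datetime import date, datetime


def age_in_years(dob: date, on: date) -> int:
    """Whole years between the date of birth and the given day."""
    had_birthday = (on.month, on.day) >= (dob.month, dob.day)
    return on.year - dob.year - (0 if had_birthday else 1)


def minutes_since_midnight(ts: datetime) -> int:
    """Hour and minute of the timestamp folded into one number (assumed encoding)."""
    return ts.hour * 60 + ts.minute
```

For example, a customer born on 17 May 1980 is 43 on 16 May 2024 and 44 a day later, and a transaction at 13:45 maps to 825.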

And that's it! The modified scenario is now ready for use. Even better, thanks to Nussknacker ML Runtime, we as scenario authors do not have to worry about deploying the new version of the machine learning model.

A/B testing and increasing the fraud detection accuracy

As we continue to refine our credit card fraud detection logic and model, there are several advanced techniques and enhancements that can be applied to improve the decisions made by the scenario.

Suppose the data scientist has registered a third version of the model, which should perform even better. However, we are satisfied with how the scenario is performing, and we do not want to risk switching to the new version outright. To address this, A/B testing can be easily implemented in the Nussknacker scenario. For example, 98% of all transactions could be classified with the second version of the model and only 2% with the third version. After some time, we can compare the results.
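One common way to implement such a split is to hash a stable identifier and route a fixed share of the hash space to the new version. The sketch below shows the idea in Python; how the split is actually expressed in a Nussknacker scenario is not covered here, and the function name, the use of the transaction id as the key and the bucket resolution are assumptions.

```python
import hashlib

BUCKETS = 10_000  # resolution of the split: one bucket is 0.01% of the traffic


def choose_model_version(
    transaction_id: str,
    challenger_share: float = 0.02,
    champion: str = "2",
    challenger: str = "3",
) -> str:
    """Deterministically pick a model version for a transaction."""
    digest = hashlib.sha256(transaction_id.encode("utf-8")).digest()
    # Map the hash to a bucket in [0, BUCKETS); the same id always lands in the same bucket.
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return challenger if bucket < round(challenger_share * BUCKETS) else champion
```

Hashing instead of drawing a random number keeps the assignment reproducible, which makes it easier to compare the two versions afterwards.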

A/B testing is in fact similar to ensemble models, which combine multiple machine learning models into a more powerful predictor. A scenario can apply techniques such as:

- bagging (multiple models are invoked in parallel and their predictions are combined by a vote; the vote can be implemented in a Nussknacker scenario, e.g. as the maximum of the predicted risks)

- stacking (multiple models are invoked sequentially; the prediction of one model can be an input of the other model)

A scenario author can easily create these ensemble models, allowing each model to contribute to a more reliable overall prediction. This approach enables real-time decision-making with improved precision by drawing on the strengths of diverse models.
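The two combination patterns described above can be sketched in Python as follows. The models are represented as plain callables returning a risk score; the function names and the `first_stage_risk` feature name are hypothetical.

```python
from typing import Callable, Sequence

Model = Callable[[dict], float]  # takes input features, returns a risk score


def vote_max(models: Sequence[Model], features: dict) -> float:
    """Parallel pattern: score with every model and keep the highest risk."""
    return max(model(features) for model in models)


def stack(first: Model, second: Model, features: dict,
          feature_name: str = "first_stage_risk") -> float:
    """Sequential pattern: feed the first model's prediction to the second."""
    return second({**features, feature_name: first(features)})
```

Taking the maximum is the most cautious vote, since a single model raising the alarm is enough; an average or a majority vote would be equally easy to express.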

ML model inference can be much simpler

As we have outlined, you can quickly put new machine learning models to use without the lengthy integration process typically required. There's no need for the tedious work of embedding these models into your application. If you are interested in our solution, please contact info@nussknacker.io. And do not forget to read our previous post for a general introduction to ML model inference with Nussknacker.

follow the author👉 Łukasz Jędrzejewski